最新刊期

卷 24 , 期 10 , 2019

-

摘要:The visual simulation of crowd emergency evacuation is a method that uses agents to simulate individuals with autonomous perceptions, emotions, and behavioral abilities. With 3D visual means, it can visualize the emergency evacuation scenarios of the crowd. This study summarizes the research progress from the sources of crowd simulation data, the construction of the crowd navigation and behavior models, the crowd emotional contagion, and the crowd rendering. This study also discusses issues that must be studied from the perspective of the verifiability of the simulation model, the construction of the crowd evacuation navigation model and the physical model of humans and environments, the animal evacuation experiment and simulation, the social behavior of evacuation, and the visual calculation of crowd emotions. For the problems that must be studied in depth, results are as follows. The video surveillance analysis of emergency events and the user survey of virtual crowd scenarios can be used to improve the crowd simulation model. The analysis of animal evacuation experiments can improve the crowd navigation algorithm. The social behavior model can describe the diversity of the crowd evacuation behaviors in further details. The calculation method based on multichannel perceptions can describe the process of emotional contagion in further details. Visual simulation research on crowd emergency evacuation behaviors has important application prospects in the management of urban safety. However, numerous problems remain to be solved in this field. The comprehensive application of multidisciplinary knowledge and the improvement of experimental methods are the keys for future studies.关键词:crowd emergency evacuation;visual simulation;emotion contagion;crowd behavior;crowd navigation16|75|1更新时间:2024-05-07

摘要:The visual simulation of crowd emergency evacuation is a method that uses agents to simulate individuals with autonomous perceptions, emotions, and behavioral abilities. With 3D visual means, it can visualize the emergency evacuation scenarios of the crowd. This study summarizes the research progress from the sources of crowd simulation data, the construction of the crowd navigation and behavior models, the crowd emotional contagion, and the crowd rendering. This study also discusses issues that must be studied from the perspective of the verifiability of the simulation model, the construction of the crowd evacuation navigation model and the physical model of humans and environments, the animal evacuation experiment and simulation, the social behavior of evacuation, and the visual calculation of crowd emotions. For the problems that must be studied in depth, results are as follows. The video surveillance analysis of emergency events and the user survey of virtual crowd scenarios can be used to improve the crowd simulation model. The analysis of animal evacuation experiments can improve the crowd navigation algorithm. The social behavior model can describe the diversity of the crowd evacuation behaviors in further details. The calculation method based on multichannel perceptions can describe the process of emotional contagion in further details. Visual simulation research on crowd emergency evacuation behaviors has important application prospects in the management of urban safety. However, numerous problems remain to be solved in this field. The comprehensive application of multidisciplinary knowledge and the improvement of experimental methods are the keys for future studies.关键词:crowd emergency evacuation;visual simulation;emotion contagion;crowd behavior;crowd navigation16|75|1更新时间:2024-05-07

Scholar View

-

摘要:Sensor-based smoke detection techniques have been widely used in industrial applications. With the development of artificial intelligence, especially the successful commercial application of deep learning, the number of cases in which computer vision-based techniques are applied to smoke detection for fire alarm has increased. Computer vision techniques have not been used as substitutes of sensors in smoke detection systems because of frequent false and missed alarm. By improving computer capability and storage devices, several shortcomings in traditional video smoke detection have been improved or even solved, but these improvements are accompanied with new challenges. To keep up with the development of and latest research on smoke recognition, detection, and segmentation, this study focuses on related domestic and international literature published from 2017 to 2019. From the perspective of tasks and based on years of studying smoke detection, we divide forest fire alarm relying on smoke into three categories, namely, smoke recognition, detection, and segmentation. The three categories of tasks are of different grains and called smoke surveillance tasks. This study grain-wisely presents the latest methods of achieving the above-mentioned surveillance tasks in different aspects ranging from traditional techniques to deep ones. Concretely, related studies on coarse-grained surveillance tasks based on traditional algorithms are introduced first, followed by those on fine-grained tasks implemented by deep learning frameworks. Among the three surveillance tasks, smoke recognition is adopted as the basis. Hence, regarding smoke recognition, detection, and segmentation as recognition-based tasks in coarse-to-fine grain is reasonable. For instance, smoke recognition is the coarsest-grained task and smoke segmentation is the finest-grained recognition task among the three surveillance tasks. Given that the latest literature focuses more on detection and segmentation than on recognition, this study follows this trend and introduces methods of smoke region rough extraction, which obtains a candidate smoke region, and region refinement, which obtains the final detection or segmentation results. Furthermore, according to research, the most distinguishing characteristics of smoke are dynamic features, such as motion and diffusion, and the most stable and robust characteristics of smoke are static features, such as texture. Therefore, during the introduction to smoke region extraction, the extraction and leveraging of static and motion features are explored in every step to gain discriminative capability and robustness for accurate smoke recognition and location. Meanwhile, because deep learning methods tend to present end-to-end solutions rather than individual steps for surveillance tasks, introducing deep learning-based surveillance tasks step-wisely is difficult. Consequently, deep learning-based methods for surveillance tasks are introduced in another section grain-wisely. The overall frameworks and inner concepts are involved rather than the algorithm steps of deep learning-based smoke surveillance. Lastly, the strengths and weaknesses in smoke surveillance tasks are determined, and widely used evaluation indicators and several available datasets are summarized to allow researchers to search for evaluators and annotated datasets. Future development trends are also predicted. Through a comprehensive literature review of surveillance tasks in coarse-to-fine grain, the key techniques, problems to be solved, and promising research directions are demonstrated. Thus, potential solutions can be provided to surveillance task-based forest fire alarm. Further research based on this review might promote the industrial application of smoke surveillance tasks.关键词:smoke recognition;smoke detection;smoke segmentation;deep learning;review79|154|10更新时间:2024-05-07

摘要:Sensor-based smoke detection techniques have been widely used in industrial applications. With the development of artificial intelligence, especially the successful commercial application of deep learning, the number of cases in which computer vision-based techniques are applied to smoke detection for fire alarm has increased. Computer vision techniques have not been used as substitutes of sensors in smoke detection systems because of frequent false and missed alarm. By improving computer capability and storage devices, several shortcomings in traditional video smoke detection have been improved or even solved, but these improvements are accompanied with new challenges. To keep up with the development of and latest research on smoke recognition, detection, and segmentation, this study focuses on related domestic and international literature published from 2017 to 2019. From the perspective of tasks and based on years of studying smoke detection, we divide forest fire alarm relying on smoke into three categories, namely, smoke recognition, detection, and segmentation. The three categories of tasks are of different grains and called smoke surveillance tasks. This study grain-wisely presents the latest methods of achieving the above-mentioned surveillance tasks in different aspects ranging from traditional techniques to deep ones. Concretely, related studies on coarse-grained surveillance tasks based on traditional algorithms are introduced first, followed by those on fine-grained tasks implemented by deep learning frameworks. Among the three surveillance tasks, smoke recognition is adopted as the basis. Hence, regarding smoke recognition, detection, and segmentation as recognition-based tasks in coarse-to-fine grain is reasonable. For instance, smoke recognition is the coarsest-grained task and smoke segmentation is the finest-grained recognition task among the three surveillance tasks. Given that the latest literature focuses more on detection and segmentation than on recognition, this study follows this trend and introduces methods of smoke region rough extraction, which obtains a candidate smoke region, and region refinement, which obtains the final detection or segmentation results. Furthermore, according to research, the most distinguishing characteristics of smoke are dynamic features, such as motion and diffusion, and the most stable and robust characteristics of smoke are static features, such as texture. Therefore, during the introduction to smoke region extraction, the extraction and leveraging of static and motion features are explored in every step to gain discriminative capability and robustness for accurate smoke recognition and location. Meanwhile, because deep learning methods tend to present end-to-end solutions rather than individual steps for surveillance tasks, introducing deep learning-based surveillance tasks step-wisely is difficult. Consequently, deep learning-based methods for surveillance tasks are introduced in another section grain-wisely. The overall frameworks and inner concepts are involved rather than the algorithm steps of deep learning-based smoke surveillance. Lastly, the strengths and weaknesses in smoke surveillance tasks are determined, and widely used evaluation indicators and several available datasets are summarized to allow researchers to search for evaluators and annotated datasets. Future development trends are also predicted. Through a comprehensive literature review of surveillance tasks in coarse-to-fine grain, the key techniques, problems to be solved, and promising research directions are demonstrated. Thus, potential solutions can be provided to surveillance task-based forest fire alarm. Further research based on this review might promote the industrial application of smoke surveillance tasks.关键词:smoke recognition;smoke detection;smoke segmentation;deep learning;review79|154|10更新时间:2024-05-07 -

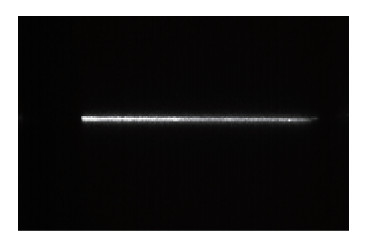

摘要:ObjectiveSmoke detection by surveillance cameras is reasonable to warn fire. This technology has many advantages compared with other traditional point detectors. Wide areas could be covered, rapid respondence could be available, and installation and maintenance requirements could be less. However, the current smoke detection algorithms are unsatisfying in terms of accuracy and sensitivity due to the varying colors, shapes, and textures of smoke. The traditional studies focus on designing handcrafted features that extract such static features as colors, shapes, and textures and dynamic ones, including shape deforming, drifting, and frequency shifting. This task is time consuming. Although the algorithm exhibits good characteristics, maintaining its robustness for all environments is difficult. The detection effectiveness often sharply descends when these methods are applied in different environments. The fashionable methods, such as convolution neural network (CNN), recurrent neural network (RNN), and other statistical methods, are based on deep learning. However, applying these methods is difficult because the surveillance platforms have limited resources. These networks are also unsatisfying in terms of accuracy and sensitivity.MethodThe proposed method utilizes trajectories in condensed images, which are summed in horizontal and vertical directions for all video pixels. Smoke trajectories in condensed images are always right-leaning, straightly linear, proportional, and streamline-like with low frequencies and fixed starting points. Accordingly, surveillance videos are summed into condensed images, sliced, and then fed into CNN to extract features to find the long-term relationship by RNN. Partitioning strategy is also adopted to improve sensitivity. Therefore, the method uses not only the trajectory shapes but also the short- and long-range relationships in the time domain to detect the existence of smoke in videos.ResultControlled experiments of CNN, C3D(3d convolutional networks), traj + SVM(support vector machine), traj + RNNs, and traj + CNN + RNNs are conducted. The CNN and C3D methods are typical deep learning networks that initially extract features and then make judgments. The traj + SVM method detects smoke trajectories by traditional SVM algorithm, the traj + RNNs method finds smoke trajectories by RNNs, and the traj + CNN + RNNs method recognizes smoke trajectories by combining CNN and RNNs, which is the proposed method. The accuracy of the traj + CNN + RNNs method is increased by 35.2% compared with that of traj + SVM, and the real negative rate is increased by 15.6%. However, the computing cost of the traj + CNN + RNNs method is relatively high. The frame rate, maximum memory consumption, and network weight are 49 frame/s, 2.31 GB, and 261 MB, respectively. By contrast, the frame rate of traj + SVM is 178 frame/s. The computing cost of deep learning networks is generally high. Nevertheless, the traj + CNN + RNNs method is the lightest and fastest among all deep learning networks. Some confusing data for many traditional methods are collected for the second experiment to further compare these methods. The methods based on trajectories, namely, traj + SVM, traj + RNNs, and traj + CNN + RNNs, remain at a good level, and the indexes of ACC(accaracy), TPR(trure positive rate), and TNR(true negative rate) and the sensitivity are 0.853, 0.847, 0.872, and 52/26(frame/s), respectively. However, the corresponding indexes of CNN and C3D considerably reduced. The accuracies of CNN and C3D are 0.585 and 0.716, respectively.ConclusionThe proposed method helps improve the accuracy and sensitivity of smoke detection. The smoke trajectories can be identified from the condensed images, even from those of early smoke, which are helpful for early fire warning.关键词:fire;smoke;summed trajectory;feature recognition;deep learning;recurrent neural network(RNN)22|43|3更新时间:2024-05-07

摘要:ObjectiveSmoke detection by surveillance cameras is reasonable to warn fire. This technology has many advantages compared with other traditional point detectors. Wide areas could be covered, rapid respondence could be available, and installation and maintenance requirements could be less. However, the current smoke detection algorithms are unsatisfying in terms of accuracy and sensitivity due to the varying colors, shapes, and textures of smoke. The traditional studies focus on designing handcrafted features that extract such static features as colors, shapes, and textures and dynamic ones, including shape deforming, drifting, and frequency shifting. This task is time consuming. Although the algorithm exhibits good characteristics, maintaining its robustness for all environments is difficult. The detection effectiveness often sharply descends when these methods are applied in different environments. The fashionable methods, such as convolution neural network (CNN), recurrent neural network (RNN), and other statistical methods, are based on deep learning. However, applying these methods is difficult because the surveillance platforms have limited resources. These networks are also unsatisfying in terms of accuracy and sensitivity.MethodThe proposed method utilizes trajectories in condensed images, which are summed in horizontal and vertical directions for all video pixels. Smoke trajectories in condensed images are always right-leaning, straightly linear, proportional, and streamline-like with low frequencies and fixed starting points. Accordingly, surveillance videos are summed into condensed images, sliced, and then fed into CNN to extract features to find the long-term relationship by RNN. Partitioning strategy is also adopted to improve sensitivity. Therefore, the method uses not only the trajectory shapes but also the short- and long-range relationships in the time domain to detect the existence of smoke in videos.ResultControlled experiments of CNN, C3D(3d convolutional networks), traj + SVM(support vector machine), traj + RNNs, and traj + CNN + RNNs are conducted. The CNN and C3D methods are typical deep learning networks that initially extract features and then make judgments. The traj + SVM method detects smoke trajectories by traditional SVM algorithm, the traj + RNNs method finds smoke trajectories by RNNs, and the traj + CNN + RNNs method recognizes smoke trajectories by combining CNN and RNNs, which is the proposed method. The accuracy of the traj + CNN + RNNs method is increased by 35.2% compared with that of traj + SVM, and the real negative rate is increased by 15.6%. However, the computing cost of the traj + CNN + RNNs method is relatively high. The frame rate, maximum memory consumption, and network weight are 49 frame/s, 2.31 GB, and 261 MB, respectively. By contrast, the frame rate of traj + SVM is 178 frame/s. The computing cost of deep learning networks is generally high. Nevertheless, the traj + CNN + RNNs method is the lightest and fastest among all deep learning networks. Some confusing data for many traditional methods are collected for the second experiment to further compare these methods. The methods based on trajectories, namely, traj + SVM, traj + RNNs, and traj + CNN + RNNs, remain at a good level, and the indexes of ACC(accaracy), TPR(trure positive rate), and TNR(true negative rate) and the sensitivity are 0.853, 0.847, 0.872, and 52/26(frame/s), respectively. However, the corresponding indexes of CNN and C3D considerably reduced. The accuracies of CNN and C3D are 0.585 and 0.716, respectively.ConclusionThe proposed method helps improve the accuracy and sensitivity of smoke detection. The smoke trajectories can be identified from the condensed images, even from those of early smoke, which are helpful for early fire warning.关键词:fire;smoke;summed trajectory;feature recognition;deep learning;recurrent neural network(RNN)22|43|3更新时间:2024-05-07 -

摘要:ObjectiveVideo smoke detection plays an important role in real-time fire alarms by solving the limitation of applying sensors in large spaces, outdoors, and other types of environment with strong air turbulence. Current video-based methods mainly extract the static and dynamic features of smoke and process them with the same structured model, which may disregard its continuous information and unstructured feature properties. Graph convolutional networks (GCNs) and neural ordinary differential equations (ODEs) exhibit powerful strength on processing non-Euclidean structures and continuous timeline models. Therefore, these methods can ultimately be utilized in video smoke detection. On account of the success of these new methods, we propose a flow-based continuous graph model for video smoke detection.MethodIn this study, we constructed a continuous timeline model using a neural ODE network, while most methods in the video smoke detection domain remain focused on discrete spatial-temporal features in the Euclidean space. We considered video frames with fixed time spans as sample points on a continuous timeline. By simulating the latent space of hidden variables through the latent time series model, we could obtain the hidden information between frames, which may be disregarded in discrete models. When the model was established, the lost between-frames information and the short-term future frame can be predicted. Through this procedure, we could effectively advance fire alarms. For the detection functions, we used GCNs to extract the feature of the video frame (or block), which will be trained for classification by utilizing fully and weakly supervised methods. Considering the lack of smoke labels for bounding boxes or pixel-level ground truths in real smoke video datasets, we pretrained our model on a number of smoke images and used it to predict the label of sliding windows in a labeled video frame. This process was conducted to find the origin fire point or predict the motion information of smoke.ResultWe compare our model with seven state-of-the-art models of video smoke detection and five image-detection models, including the traditional approaches and deep-learning methods on two video and four image datasets. The video data are collected from KMU, Bilkent, USTC, and Yuan. The quantitative evaluation metrics contain detection rate (DR), accuracy rate (AR), false alarm rate, average true positive rate (ATPR), average false positive rate, average true negative rate, and F-measure (F2). We provide several latent models of each method for comparisons. Experimental results show that our model outperforms most of other methods in KMU and the Yuan datasets. The visualized detection samples show that our model can capture the dynamic motion feature of smoke and predict the origin fire point by combining these features. Comparative experiments demonstrate that the continuous model improves smoke detection accuracy. Compared with the 3D parallel convolutional network and other results in the KMU video dataset, ATPRs increase by 0.6%. Compared with DMCNN and other results in the Yuan image datasets, obtained ARs increase by 0.21% and 0.06% on image datasets, respectively. Although the results of the state-of-art models are over 98%, we also achieve DR increases by 0.54% and 0.28%. In addition, we conduct a series of experiments in the Bilkent video datasets to verify the effectiveness and robustness of our latent model on the prediction of smoke motion. As shown in the separated screenshots of the real smoke videos, we initially sample several frames randomly and slide the bounding box window to divide the image block and predict their labels using our continuous graph convolutional model. We use the pretrained model given that the real smoke videos do not have specific labels for bounding boxes or pixels. Thereafter, we feed the center point of these samples to our latent model and predict the labels of the bounding boxes in the current image. Through visualizing the smoke areas detected by our model, we find that our latent model correctly tracks the diffusion direction of smoke and updates its locations. By reversing the timeline fed to the latent model, we can obtain the trajectory of smoke fusion back to its origin point. Therefore, the effectiveness of our latent model to predict smoke motion and infer the origin fire point is demonstrated. However, a quantized verification has not been conducted yet.ConclusionIn this study, we propose a video-based continuous graph convolutional model that combines the strength of structured and unstructured models. We also capture the dynamic information of smoke and effectively predict the origin fire point. Experiment results show that our model outperforms several state-of-the-art approaches of video and image smoke detection.关键词:video smoke detection;smoke recognition;graph convolutional network(GCN);neural ordinary differential equations;metric learning;weakly supervised learning23|42|2更新时间:2024-05-07

摘要:ObjectiveVideo smoke detection plays an important role in real-time fire alarms by solving the limitation of applying sensors in large spaces, outdoors, and other types of environment with strong air turbulence. Current video-based methods mainly extract the static and dynamic features of smoke and process them with the same structured model, which may disregard its continuous information and unstructured feature properties. Graph convolutional networks (GCNs) and neural ordinary differential equations (ODEs) exhibit powerful strength on processing non-Euclidean structures and continuous timeline models. Therefore, these methods can ultimately be utilized in video smoke detection. On account of the success of these new methods, we propose a flow-based continuous graph model for video smoke detection.MethodIn this study, we constructed a continuous timeline model using a neural ODE network, while most methods in the video smoke detection domain remain focused on discrete spatial-temporal features in the Euclidean space. We considered video frames with fixed time spans as sample points on a continuous timeline. By simulating the latent space of hidden variables through the latent time series model, we could obtain the hidden information between frames, which may be disregarded in discrete models. When the model was established, the lost between-frames information and the short-term future frame can be predicted. Through this procedure, we could effectively advance fire alarms. For the detection functions, we used GCNs to extract the feature of the video frame (or block), which will be trained for classification by utilizing fully and weakly supervised methods. Considering the lack of smoke labels for bounding boxes or pixel-level ground truths in real smoke video datasets, we pretrained our model on a number of smoke images and used it to predict the label of sliding windows in a labeled video frame. This process was conducted to find the origin fire point or predict the motion information of smoke.ResultWe compare our model with seven state-of-the-art models of video smoke detection and five image-detection models, including the traditional approaches and deep-learning methods on two video and four image datasets. The video data are collected from KMU, Bilkent, USTC, and Yuan. The quantitative evaluation metrics contain detection rate (DR), accuracy rate (AR), false alarm rate, average true positive rate (ATPR), average false positive rate, average true negative rate, and F-measure (F2). We provide several latent models of each method for comparisons. Experimental results show that our model outperforms most of other methods in KMU and the Yuan datasets. The visualized detection samples show that our model can capture the dynamic motion feature of smoke and predict the origin fire point by combining these features. Comparative experiments demonstrate that the continuous model improves smoke detection accuracy. Compared with the 3D parallel convolutional network and other results in the KMU video dataset, ATPRs increase by 0.6%. Compared with DMCNN and other results in the Yuan image datasets, obtained ARs increase by 0.21% and 0.06% on image datasets, respectively. Although the results of the state-of-art models are over 98%, we also achieve DR increases by 0.54% and 0.28%. In addition, we conduct a series of experiments in the Bilkent video datasets to verify the effectiveness and robustness of our latent model on the prediction of smoke motion. As shown in the separated screenshots of the real smoke videos, we initially sample several frames randomly and slide the bounding box window to divide the image block and predict their labels using our continuous graph convolutional model. We use the pretrained model given that the real smoke videos do not have specific labels for bounding boxes or pixels. Thereafter, we feed the center point of these samples to our latent model and predict the labels of the bounding boxes in the current image. Through visualizing the smoke areas detected by our model, we find that our latent model correctly tracks the diffusion direction of smoke and updates its locations. By reversing the timeline fed to the latent model, we can obtain the trajectory of smoke fusion back to its origin point. Therefore, the effectiveness of our latent model to predict smoke motion and infer the origin fire point is demonstrated. However, a quantized verification has not been conducted yet.ConclusionIn this study, we propose a video-based continuous graph convolutional model that combines the strength of structured and unstructured models. We also capture the dynamic information of smoke and effectively predict the origin fire point. Experiment results show that our model outperforms several state-of-the-art approaches of video and image smoke detection.关键词:video smoke detection;smoke recognition;graph convolutional network(GCN);neural ordinary differential equations;metric learning;weakly supervised learning23|42|2更新时间:2024-05-07

Fire and Smoke Special Forum

-

摘要:ObjectiveHeart rate is an important physiological parameter that reflects the cardiovascular condition and mental state of the human body. Traditional techniques for heart rate detection need pressure sensors or optical sensors attached with human skin. However, the contact between sensors and the human skin tends to result in inconvenience for subjects, especially for those with skin diseases. Accordingly, using traditional techniques in our daily lives is difficult. With the improvement of technology for bio-image information processing, a non-contact heart rate detection method based on imaging photoplethysmography has become an attractive research focus. The color of human epidermis changes in a subtle way with the rhythm of heart beat. These changes are invisible to human eyes and can be captured by a webcam for heart rate estimation. Current technologies for non-contact heart rate detection prioritize facial skin due to its dense capillary distribution and the significant improvement of face tracking technology. However, in realistic environments involving facial motions, the precision of heart rate detected fails to meet the requirements. In recent years, various methods, such as independent principal component analysis, adaptive filtering, and wavelet transform, have been proposed to address this problem. After obtaining initial chrominance signals from different channels of a video, the independent principal component analysis algorithm extracts mutually independent source signals from the initial signals. One of the source signals represents the pulsation of heart beat. However, the source signals obtained are still corrupted by abundant noise. The independent principal component analysis algorithm is complicated, which hinders their widespread application. Adaptive filtering is generally based on the least mean square algorithm. Noises mixed in the chrominance signal can be removed by adaptively adjusting the parameters of the filter, regardless of the noise characteristics. However, this method can merely filter out Gaussian white noise, rather than the sharp noise caused by motion interference in practice. Wavelet transformation method decomposes the original blood volume pulse signal into a series of frequency bands. The method then selects the signals limited in the heart rate band and integrates them into a desirable signal, from which heart rate can be estimated by later processes. Nevertheless, the power spectral density function of the sharp noise caused by motion interference overwhelms the entire heart rate band, which cannot be discarded thoroughly by wavelet transformation. Overall, existing non-contact heart rate detection technologies fail to effectively filter out the sharp noise caused by facial motions, and their results are inaccurate.MethodTo tackle above-mentioned problems, this work proposes a novel method for detection of facial motions. Discriminative response map fitting and Kanede-Lucas-Tomasi algorithm are used in video to detect and track the facial regions. Some facial areas (e.g., eyes) do not contain information about heart rate. Thus the face is divided into several sub-regions, and these sub-regions are then studied respectively, with the aim of mitigating the impact of uninteresting areas. Chrominance characteristics of each sub-region are extracted from the video to establish the raw blood volume pulse matrix. In theoretical aspect, the ideal blood volume pulse matrix should be low-rank. However, in practice, the expression change at a certain moment may make the blood volume pulse distorted abruptly in corresponding time. The rank of the blood volume pulse matrix increase as the result of the distortion of local elements. This work performs the adaptive signal recovery algorithm on the damaged blood volume pulse matrix to discard abnormal elements arising from facial motions and reconstruct the row-rank matrix. From the matrix, sub-regions rich in information about heart rate are selected to compose the desirable blood volume pulse signal. Heart rate can be calculated according to the power spectrum density of the desirable blood volume pulse signal.ResultAbout 211 videos from 30 subjects are recorded under natural ambient lighting condition. Three series of experiments are conducted on the proposed method to verify its validity:experiments focusing on heart rate accuracy in static scenarios and dynamic scenarios, experiments focusing on method stability with different video durations and frame rates, and a 10 minutes experiment for long-term heart rate monitoring. Results of the accuracy experiments show that the Pearson correlation coefficient between the estimated heart rate and the ground truth is 0.990 2 in static scenarios and 0.960 5 in dynamic scenarios. The heart rate estimated by the proposed method highly approximates to the ground truth. Compared with the newest method, our method decreases the error rates by 53.90% and increases the Pearson correlation coefficient by 7.46%. The stability experiments show that the proposed method performs well as long as the duration of videos is higher than 8 seconds or the frame rate is higher than 20 frames per second. The long-term heart rate monitoring shows that the heart rate measured by the proposed method has similar fluctuations with the ground truth.ConclusionThe proposed method, which is proved to be superior to state-of-the-art ones, can dispose of expression disturbances and increase the detection accuracy of heart rate. However, in cases involving sudden illumination changes, the accuracy of the proposed method fails to reach the high level, which is planned to be further improved in our future works.关键词:imaging photoplethysmography(IPPG);facial motions;heart rate detection;self-adaptive signal recovery(SSR);desirable blood volume pulse signal37|115|3更新时间:2024-05-07

摘要:ObjectiveHeart rate is an important physiological parameter that reflects the cardiovascular condition and mental state of the human body. Traditional techniques for heart rate detection need pressure sensors or optical sensors attached with human skin. However, the contact between sensors and the human skin tends to result in inconvenience for subjects, especially for those with skin diseases. Accordingly, using traditional techniques in our daily lives is difficult. With the improvement of technology for bio-image information processing, a non-contact heart rate detection method based on imaging photoplethysmography has become an attractive research focus. The color of human epidermis changes in a subtle way with the rhythm of heart beat. These changes are invisible to human eyes and can be captured by a webcam for heart rate estimation. Current technologies for non-contact heart rate detection prioritize facial skin due to its dense capillary distribution and the significant improvement of face tracking technology. However, in realistic environments involving facial motions, the precision of heart rate detected fails to meet the requirements. In recent years, various methods, such as independent principal component analysis, adaptive filtering, and wavelet transform, have been proposed to address this problem. After obtaining initial chrominance signals from different channels of a video, the independent principal component analysis algorithm extracts mutually independent source signals from the initial signals. One of the source signals represents the pulsation of heart beat. However, the source signals obtained are still corrupted by abundant noise. The independent principal component analysis algorithm is complicated, which hinders their widespread application. Adaptive filtering is generally based on the least mean square algorithm. Noises mixed in the chrominance signal can be removed by adaptively adjusting the parameters of the filter, regardless of the noise characteristics. However, this method can merely filter out Gaussian white noise, rather than the sharp noise caused by motion interference in practice. Wavelet transformation method decomposes the original blood volume pulse signal into a series of frequency bands. The method then selects the signals limited in the heart rate band and integrates them into a desirable signal, from which heart rate can be estimated by later processes. Nevertheless, the power spectral density function of the sharp noise caused by motion interference overwhelms the entire heart rate band, which cannot be discarded thoroughly by wavelet transformation. Overall, existing non-contact heart rate detection technologies fail to effectively filter out the sharp noise caused by facial motions, and their results are inaccurate.MethodTo tackle above-mentioned problems, this work proposes a novel method for detection of facial motions. Discriminative response map fitting and Kanede-Lucas-Tomasi algorithm are used in video to detect and track the facial regions. Some facial areas (e.g., eyes) do not contain information about heart rate. Thus the face is divided into several sub-regions, and these sub-regions are then studied respectively, with the aim of mitigating the impact of uninteresting areas. Chrominance characteristics of each sub-region are extracted from the video to establish the raw blood volume pulse matrix. In theoretical aspect, the ideal blood volume pulse matrix should be low-rank. However, in practice, the expression change at a certain moment may make the blood volume pulse distorted abruptly in corresponding time. The rank of the blood volume pulse matrix increase as the result of the distortion of local elements. This work performs the adaptive signal recovery algorithm on the damaged blood volume pulse matrix to discard abnormal elements arising from facial motions and reconstruct the row-rank matrix. From the matrix, sub-regions rich in information about heart rate are selected to compose the desirable blood volume pulse signal. Heart rate can be calculated according to the power spectrum density of the desirable blood volume pulse signal.ResultAbout 211 videos from 30 subjects are recorded under natural ambient lighting condition. Three series of experiments are conducted on the proposed method to verify its validity:experiments focusing on heart rate accuracy in static scenarios and dynamic scenarios, experiments focusing on method stability with different video durations and frame rates, and a 10 minutes experiment for long-term heart rate monitoring. Results of the accuracy experiments show that the Pearson correlation coefficient between the estimated heart rate and the ground truth is 0.990 2 in static scenarios and 0.960 5 in dynamic scenarios. The heart rate estimated by the proposed method highly approximates to the ground truth. Compared with the newest method, our method decreases the error rates by 53.90% and increases the Pearson correlation coefficient by 7.46%. The stability experiments show that the proposed method performs well as long as the duration of videos is higher than 8 seconds or the frame rate is higher than 20 frames per second. The long-term heart rate monitoring shows that the heart rate measured by the proposed method has similar fluctuations with the ground truth.ConclusionThe proposed method, which is proved to be superior to state-of-the-art ones, can dispose of expression disturbances and increase the detection accuracy of heart rate. However, in cases involving sudden illumination changes, the accuracy of the proposed method fails to reach the high level, which is planned to be further improved in our future works.关键词:imaging photoplethysmography(IPPG);facial motions;heart rate detection;self-adaptive signal recovery(SSR);desirable blood volume pulse signal37|115|3更新时间:2024-05-07

Image Processing and Coding

-

摘要:ObjectiveHumans fully understand a picture, often classify different images, and understand all the information in each image, including the location and concept of the object. This task is called object detection and is one of the basic research areas in computer vision. Object detection consists of different subtasks, such as pedestrian detection and skeleton detection. Pedestrian detection is a key link in object detection and one of the difficult tasks. This study mainly investigates pedestrian detection in traffic scenes, which is one of the most valuable topics in the field of pedestrian detection. Pedestrian detection in traffic scenes has always been a key technology for intelligent video surveillance technology, unmanned technology, intelligent transportation, and other issues. In recent years, this topic has been the research focus in academic and industrial circles. With the upsurge of artificial intelligence technology development, a large number of computer vision technologies are widely used. Multi-scale pedestrian detection has great research value because the development and application of pedestrian detection has complex real scenes and different pedestrian scales. Pedestrian detection is widely used in the field of automatic driving and video surveillance and is a hot research topic. Current pedestrian detection algorithms based on deep learning have false detection and miss detection problems in the case of low resolution and small pedestrian scale. A multi-scale pedestrian detection algorithm based on multi-layer features is proposed. The proposed convolutional neural network exhibits improved accuracy of pedestrian detection by a level, and there has been no small progress in practical applications. The academic enthusiasm brought about by deep learning has enabled scholars to make great progress and breakthroughs in pedestrian detection in complex scenes. Deep learning in the future will be a major boost for pedestrian detection.MethodThe deep residual network is mainly used in the multi-objective classification field. After analyzing the network, only the feature maps of the three stages are used and the residual unit and the full connection layer of the last stage are deleted. The deep residual network is mainly used to extract the feature maps of the three stages. The feature map extracted by the last layer is doubled using the characteristics of the three feature maps and then added by the nearest neighbor sampling method. The features with rich high-level semantic information and the features with rich low-level detail information are combined to improve the detection effect. The merged three-layer features are encoded into the region proposal network, and the proposal frames with pedestrians are obtained through Softmax classification for pedestrian detection. In this work, four experiments are designed, three of which are used to verify the validity of the proposed method. Results are compared with the mainstream algorithm results. Comparative experiments indicate that simple stratification does not improve the effect and the effect of multi-layer fusion is unsatisfactory. Therefore, the method of adjacent layer fusion is selected, and the result of multi-scale pedestrian detection is directly compared with that of the deepest network. The effect of adjacent layer fusion is better than the result. All experimental results are compared, and the fusion results of the adjacent layers are the best. The rate of missed detection is lower than that of the mainstream algorithm. The network is fully convolved and consists end-to-end training through random downsampling and backpropagation. Each image contains a number of candidate boxes for positive and negative samples. However, directly taking the optimized sample will easily lead to loss bias to the negative sample because the number of negative samples is larger than that of positive samples. This study takes an image to select 256 anchors and calculates its loss. The ratio of the positive and negative samples is 1:1. This article will randomly initialize all new layers in the network, and the standard of initialization is from the zero mean standard deviation. The value set is 0.01, and the weight is taken from Gaussian distribution. The other layers are initialized by classifying the pre-trained model, and the entire training process iterates through two epochs.ResultOn the Caltech pedestrian detection dataset and under the condition that each image false alarm rate (FPPI) is 10%, the loss rate of the proposed algorithm is only 57.88%, which is decreased by 3.07% compared with the loss of one of the best models, namely, MS-CNN (multi-scale convolutional neural network) (60.95%). This work also adopts comparative experiment. The overall loss rate of Ped-RPN is 64.55%, which is worse than that of the proposed algorithm. The loss rate of the layered and then detected method (Ped-muti-RPN) is 77.15%, which is better than that of Ped-RPN method. Ped-fused-RPN is a detection algorithm that combines multiple layers. The result is 61.32%, and the effect is better than the proposed algorithm.ConclusionSmall-scale pedestrians have the disadvantage of blurred images, which make the detection effect extremely poor and affect the overall multi-scale detection. In order to solve the problem of the sharp decline of small-scale pedestrian detection, this paper proposes a method of integrating deep semantic information and shallow detail features so the features of all scales have rich semantic information. The deep features have high semantic information, and the receptive field is small. The shallow features have positional information, and the receptive field is more fused. The two features can enhance the deep features, which have rich target position information. The merged feature map has different levels of detail and semantic information and has a good effect on detecting pedestrians of different scales.关键词:target detection;pedestrian detection;feature fusion;multi-scale pedestrians;multi-layer features37|303|5更新时间:2024-05-07

摘要:ObjectiveHumans fully understand a picture, often classify different images, and understand all the information in each image, including the location and concept of the object. This task is called object detection and is one of the basic research areas in computer vision. Object detection consists of different subtasks, such as pedestrian detection and skeleton detection. Pedestrian detection is a key link in object detection and one of the difficult tasks. This study mainly investigates pedestrian detection in traffic scenes, which is one of the most valuable topics in the field of pedestrian detection. Pedestrian detection in traffic scenes has always been a key technology for intelligent video surveillance technology, unmanned technology, intelligent transportation, and other issues. In recent years, this topic has been the research focus in academic and industrial circles. With the upsurge of artificial intelligence technology development, a large number of computer vision technologies are widely used. Multi-scale pedestrian detection has great research value because the development and application of pedestrian detection has complex real scenes and different pedestrian scales. Pedestrian detection is widely used in the field of automatic driving and video surveillance and is a hot research topic. Current pedestrian detection algorithms based on deep learning have false detection and miss detection problems in the case of low resolution and small pedestrian scale. A multi-scale pedestrian detection algorithm based on multi-layer features is proposed. The proposed convolutional neural network exhibits improved accuracy of pedestrian detection by a level, and there has been no small progress in practical applications. The academic enthusiasm brought about by deep learning has enabled scholars to make great progress and breakthroughs in pedestrian detection in complex scenes. Deep learning in the future will be a major boost for pedestrian detection.MethodThe deep residual network is mainly used in the multi-objective classification field. After analyzing the network, only the feature maps of the three stages are used and the residual unit and the full connection layer of the last stage are deleted. The deep residual network is mainly used to extract the feature maps of the three stages. The feature map extracted by the last layer is doubled using the characteristics of the three feature maps and then added by the nearest neighbor sampling method. The features with rich high-level semantic information and the features with rich low-level detail information are combined to improve the detection effect. The merged three-layer features are encoded into the region proposal network, and the proposal frames with pedestrians are obtained through Softmax classification for pedestrian detection. In this work, four experiments are designed, three of which are used to verify the validity of the proposed method. Results are compared with the mainstream algorithm results. Comparative experiments indicate that simple stratification does not improve the effect and the effect of multi-layer fusion is unsatisfactory. Therefore, the method of adjacent layer fusion is selected, and the result of multi-scale pedestrian detection is directly compared with that of the deepest network. The effect of adjacent layer fusion is better than the result. All experimental results are compared, and the fusion results of the adjacent layers are the best. The rate of missed detection is lower than that of the mainstream algorithm. The network is fully convolved and consists end-to-end training through random downsampling and backpropagation. Each image contains a number of candidate boxes for positive and negative samples. However, directly taking the optimized sample will easily lead to loss bias to the negative sample because the number of negative samples is larger than that of positive samples. This study takes an image to select 256 anchors and calculates its loss. The ratio of the positive and negative samples is 1:1. This article will randomly initialize all new layers in the network, and the standard of initialization is from the zero mean standard deviation. The value set is 0.01, and the weight is taken from Gaussian distribution. The other layers are initialized by classifying the pre-trained model, and the entire training process iterates through two epochs.ResultOn the Caltech pedestrian detection dataset and under the condition that each image false alarm rate (FPPI) is 10%, the loss rate of the proposed algorithm is only 57.88%, which is decreased by 3.07% compared with the loss of one of the best models, namely, MS-CNN (multi-scale convolutional neural network) (60.95%). This work also adopts comparative experiment. The overall loss rate of Ped-RPN is 64.55%, which is worse than that of the proposed algorithm. The loss rate of the layered and then detected method (Ped-muti-RPN) is 77.15%, which is better than that of Ped-RPN method. Ped-fused-RPN is a detection algorithm that combines multiple layers. The result is 61.32%, and the effect is better than the proposed algorithm.ConclusionSmall-scale pedestrians have the disadvantage of blurred images, which make the detection effect extremely poor and affect the overall multi-scale detection. In order to solve the problem of the sharp decline of small-scale pedestrian detection, this paper proposes a method of integrating deep semantic information and shallow detail features so the features of all scales have rich semantic information. The deep features have high semantic information, and the receptive field is small. The shallow features have positional information, and the receptive field is more fused. The two features can enhance the deep features, which have rich target position information. The merged feature map has different levels of detail and semantic information and has a good effect on detecting pedestrians of different scales.关键词:target detection;pedestrian detection;feature fusion;multi-scale pedestrians;multi-layer features37|303|5更新时间:2024-05-07 -

摘要:ObjectiveThe rapid development of modern network and computer technology has led people to gradually move toward the information and intelligent era. In human pose estimation, advanced semantic interpretation and judgment results are obtained through processing, analyzing, and comprehending the input image or image sequence with computer. Human pose estimation has a wide range of applications and development prospects in human-computer interaction, surveillance, image retrieval, motion analysis, virtual reality, perception interface, etc. Thus, human pose estimation based on image is an extremely important research topic in the field of computer vision. However, the problem of human pose estimation has always been a difficult and hot topic because of the influence of the diversity of human visual appearance, occlusion, and complex background. In this paper we consider the problem of human pose estimation from a single still image. Traditional 2D human pose estimation algorithms are based on the pictorial strictures (PS) models. Solving the problem with the following is difficult:human pose estimation algorithms based on the PS model need to detect human parts in images, but in real world, detecting a single member of the human body is very difficult because of the background noise and the wide variety of human appearance. In recent years, the development of deep learning has led to new methods for human pose estimation. Compared with traditional algorithms, deep models have deeper hierarchies and ability to learn more complex patterns. In this work, we mainly focus on the effect of initial features on human joint point positioning and propose cross-stage convolutional pose machines (CSCPM).MethodFirst, the VGG network is used to obtain the preliminary initial features of the image, which is the basis of the image joint point positioning. The VGG network inherits the frameworks of LeNet and AlexNet and adopts a 19-layer deep network. The VGG network is the preferred algorithm to extract convolutional neural network (CNN) features from the images. The initial features retain more original information because the VGG network directly processes the image. Learning parameters in the deep convolutional network is difficult due to the interference of self-occlusion and mixed background. Second, on the basis of initial features, a multistage model is constructed to study the structural features at different scales. The multistage model consists of a sequence of convolutional networks that repeatedly produce 2D belief maps for the location of each part. The initial features are concatenated in each subsequent stage feature to solve the problem of gradient disappearance in initial feature learning. The network is divided into six stages. The first and second stages use the original image as input, and the third to sixth stages use the feature maps produced by the second stage as input. Finally, the joint loss function of the multi-scale joint location is designed to learn parameters in the deep convolutional network. Each stage of the cross-stage convolutional pose machines (CSCPM) effectively enforces supervision in intermediate stages through the network. Intermediate supervision has the advantage that even though the full deep learning architecture can have many layers, it does not fall prey to the vanishing gradient problem as the intermediate loss functions replenish the gradients at each stage. We encourage the network to repeatedly arrive at such representation by defining a loss function at the output of each stage that minimizes the Euclidean distance between the predicted and ideal belief maps for each part.ResultWe evaluate the proposed method on two widely used benchmarks, namely, MPⅡ (MPⅡ human pose dataset) and extended LSP (leeds sport pose) dataset, and compare the method with other human pose estimation methods in the past three years in terms of qualitative and quantitative analyses. In the experiments, percentage of corrected keypoints (PCK) measure is used to evaluate the performance of human pose estimation methods, where a key-point location is considered correct if its distance to the ground truth location is no more than a certain threshold for the length of a portion of the body. The official benchmark on the MPⅡ dataset adopts PCKh (using portion of head length as reference) at 0.5, while the official benchmark on the LSP dataset adopts PCK at 0.2. In the MPⅡ dataset, the total detection rate of the model is 89.1%, which 0.7% points higher than that of the model with the second highest performance. In the LSP dataset, the total detection rate of the model is 91.0%, which is 0.5% points higher than that of the model with the second highest performance. The qualitative results fully show the benefits of the cross-stage structure. The detection results are improved in some scenes, such as occlusion and complex background, because the concatenated initial features retain the original information.ConclusionThe human pose estimation model CSCPM is designed aiming at the failure cases of the convolutional pose machines (CPM) in some complex, scenes such as self-occlusion, mixed background, and joints of nearby people. The model provides a sequential prediction framework for the task of human pose estimation, which introduces a cross-stage structure based on the CPM model. The experimental results show that the proposed model improves the accuracy of human pose estimation and further accurately locates the points of the joins. The effectiveness of the proposed initial features learning and the benefit in the cross-stage structure are evaluated on two widely used human pose estimation benchmarks. Our approach achieves state-of-the-art performance on both datasets. The initial feature learning can effectively judge the self-occlusion and mixed background interference of the joints. The CSCPM, a human pose estimation model with cross-stage structure, is superior to existing human pose estimation models.关键词:cross-stage structure;initial features;belief maps;human pose estimation;deep learning28|5|2更新时间:2024-05-07

摘要:ObjectiveThe rapid development of modern network and computer technology has led people to gradually move toward the information and intelligent era. In human pose estimation, advanced semantic interpretation and judgment results are obtained through processing, analyzing, and comprehending the input image or image sequence with computer. Human pose estimation has a wide range of applications and development prospects in human-computer interaction, surveillance, image retrieval, motion analysis, virtual reality, perception interface, etc. Thus, human pose estimation based on image is an extremely important research topic in the field of computer vision. However, the problem of human pose estimation has always been a difficult and hot topic because of the influence of the diversity of human visual appearance, occlusion, and complex background. In this paper we consider the problem of human pose estimation from a single still image. Traditional 2D human pose estimation algorithms are based on the pictorial strictures (PS) models. Solving the problem with the following is difficult:human pose estimation algorithms based on the PS model need to detect human parts in images, but in real world, detecting a single member of the human body is very difficult because of the background noise and the wide variety of human appearance. In recent years, the development of deep learning has led to new methods for human pose estimation. Compared with traditional algorithms, deep models have deeper hierarchies and ability to learn more complex patterns. In this work, we mainly focus on the effect of initial features on human joint point positioning and propose cross-stage convolutional pose machines (CSCPM).MethodFirst, the VGG network is used to obtain the preliminary initial features of the image, which is the basis of the image joint point positioning. The VGG network inherits the frameworks of LeNet and AlexNet and adopts a 19-layer deep network. The VGG network is the preferred algorithm to extract convolutional neural network (CNN) features from the images. The initial features retain more original information because the VGG network directly processes the image. Learning parameters in the deep convolutional network is difficult due to the interference of self-occlusion and mixed background. Second, on the basis of initial features, a multistage model is constructed to study the structural features at different scales. The multistage model consists of a sequence of convolutional networks that repeatedly produce 2D belief maps for the location of each part. The initial features are concatenated in each subsequent stage feature to solve the problem of gradient disappearance in initial feature learning. The network is divided into six stages. The first and second stages use the original image as input, and the third to sixth stages use the feature maps produced by the second stage as input. Finally, the joint loss function of the multi-scale joint location is designed to learn parameters in the deep convolutional network. Each stage of the cross-stage convolutional pose machines (CSCPM) effectively enforces supervision in intermediate stages through the network. Intermediate supervision has the advantage that even though the full deep learning architecture can have many layers, it does not fall prey to the vanishing gradient problem as the intermediate loss functions replenish the gradients at each stage. We encourage the network to repeatedly arrive at such representation by defining a loss function at the output of each stage that minimizes the Euclidean distance between the predicted and ideal belief maps for each part.ResultWe evaluate the proposed method on two widely used benchmarks, namely, MPⅡ (MPⅡ human pose dataset) and extended LSP (leeds sport pose) dataset, and compare the method with other human pose estimation methods in the past three years in terms of qualitative and quantitative analyses. In the experiments, percentage of corrected keypoints (PCK) measure is used to evaluate the performance of human pose estimation methods, where a key-point location is considered correct if its distance to the ground truth location is no more than a certain threshold for the length of a portion of the body. The official benchmark on the MPⅡ dataset adopts PCKh (using portion of head length as reference) at 0.5, while the official benchmark on the LSP dataset adopts PCK at 0.2. In the MPⅡ dataset, the total detection rate of the model is 89.1%, which 0.7% points higher than that of the model with the second highest performance. In the LSP dataset, the total detection rate of the model is 91.0%, which is 0.5% points higher than that of the model with the second highest performance. The qualitative results fully show the benefits of the cross-stage structure. The detection results are improved in some scenes, such as occlusion and complex background, because the concatenated initial features retain the original information.ConclusionThe human pose estimation model CSCPM is designed aiming at the failure cases of the convolutional pose machines (CPM) in some complex, scenes such as self-occlusion, mixed background, and joints of nearby people. The model provides a sequential prediction framework for the task of human pose estimation, which introduces a cross-stage structure based on the CPM model. The experimental results show that the proposed model improves the accuracy of human pose estimation and further accurately locates the points of the joins. The effectiveness of the proposed initial features learning and the benefit in the cross-stage structure are evaluated on two widely used human pose estimation benchmarks. Our approach achieves state-of-the-art performance on both datasets. The initial feature learning can effectively judge the self-occlusion and mixed background interference of the joints. The CSCPM, a human pose estimation model with cross-stage structure, is superior to existing human pose estimation models.关键词:cross-stage structure;initial features;belief maps;human pose estimation;deep learning28|5|2更新时间:2024-05-07 -

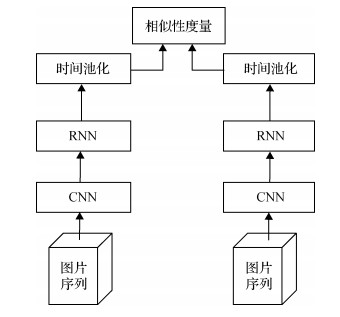

摘要:ObjectiveVideo person reidentification (re-ID) has attracted much attention due to the rapidly growing surveillance camera networks and the increasing demand of public safety. In recent years, the person reidentification task has become one of the core problems in intelligent surveillance and multimedia applications. This task aims to match the image sequences of pedestrians from non-overlapping cameras distributed at different physical locations. Given a tracklet taken from one camera, re-ID is the process of matching the person from tracklets of interest in another view. In practice, video re-ID faces several challenges. The image qualities of video frames tend to be rather low and pedestrians also exhibit a large range of pose variations because video acquisition is less constrained. Pedestrians in videos are usually moving, resulting in serious out-of-focus, blurring, and scale variations. Moreover, the same person in different videos may look different. When people move between cameras, the large appearance changes caused by environmental and geometric variations increases the difficulty of re-ID task. A lot of works has been proposed to deal with these issues. A typical video-based person re-id system first extracts the frame-wise features with deep convolutional neural networks (CNNs). The extracted features are fed into several recurrent neural networks (RNNs) to capture temporal structure information. Finally, the average or maximum temporal pooling procedure is conducted on the output RNNs to aggregate the features. However, the average pooling operation only considers the generic features of pedestrian sequences, and the specific features of samples in a sequence are neglected. While the maximum pooling operation concentrates on finding the local salient features, useful information may be abandoned. In this case, a video person re-id algorithm based on bi-directional long short-term memory (BiLSTM) and attention mechanism is proposed to make full use of temporal information and improve the robustness of person re-id systems for complex surveillance scenes.MethodFrom the input video sequence, the proposed algorithm breaks the long sequence into short snippets and randomly selects a constant number of frames for snippets. The snippets are fed into a pre-trained CNN network to extract the feature representation of each frame. In this method, the network can learn spatial appearance representation. Sequence representation is calculated by BiLSTM according to the temporal domain, which contains temporal motion information. BiLSTM in the network causes specific information to flow forward and backward in a flexible manner, allowing the underlying temporal information interaction to be fully exploited. After feature extraction, the frame-level and sequence-level features from the probe and gallery videos are fed into dot attention network independently. After calculating the correlation (the attention weight) between the sequence and its frames, the output sequence representation is reconstructed as a weighted sum of the frames at different spatial and temporal positions in the input sequence. In the attention mechanism, the network can alleviate sample noises and poor alignments in videos. Our network is implemented on the Pytorch platform and trained with a NVIDIA GTX 1080 GPU device. All training and testing images are rescaled to a fixed size of 256×128 pixels. The ResNet-50 with the pretrained parameters on ImageNet is considered the backbone network in our system. For network parameter training, we adopt stochastic gradient descent (SGD) with a momentum of 0.9. The learning rate is initially set as 0.001 and further divided by 10 after every 20 epochs. The batch size is set at 8 for training, and the total training process lasts for 40 epochs. The whole network is trained end-to-end with a joint identification and verification manner. During the test, the query and gallery videos are encoded to the feature vectors by using the aforementioned system. To compare the re-identification performance of the proposed method with the existing advanced methods, we adopt the cumulative matching characteristics (CMC) at rank-1, rank-5, rank-10, and rank-20 on all datasets.ResultThe proposed network is demonstrated on two public benchmark datasets including iLIDS-VID and PRID2011. For iLIDS-VID, the 600 video sequences of 300 persons are randomly split into 50% of persons for training and 50% of persons for testing. For PRID2011, we follow the experiment setup in previous methods and only use 400 video sequences of the first 200 persons, who appear in both cameras. The experiments on these two datasets are repeated 10 times with different test/train splits, and the results are averaged to ensure stable evaluation. Rank1 (represents the proportion of the queried people) results of the two datasets are 80.5% and 87.6% respectively. In the iLIDS-VID dataset, the Rank1 is increased by 4.5% compared with the second performance method. In the PRID2011 dataset, the Rank1 is increased by 3.9% compared with the second performance method. Extensive ablation studies verify the effectiveness of BiLSTM and attention mechanism. Compared with the results that only use LSTM in iLIDS-VID and PRID2011 datasets, the Rank1 (higher is better) is increased by 10.9% and 12.7%, respectively.ConclusionThis work proposes video person re-id method based on BiLSTM and attention mechanism. The proposed algorithm can effectively learn spatio-temporal features relevant for re-id task. Furthermore, the proposed BiLSTM allow temporal information not only to propagate from front to back but also in the reverse direction. The attention mechanism can adaptively select the discriminative information from the sequentially varying features. The proposed network significantly improves the recognition rate and has a practical application value. The proposed method shows improved robustness of video person re-id systems in complex scenes and outperforms several state-of-the-art approaches.关键词:computer vision;person re-identification;convolutional neural network (CNN);bi-directional long short-term memory(BiLSTM);attention mechanism15|4|4更新时间:2024-05-07

摘要:ObjectiveVideo person reidentification (re-ID) has attracted much attention due to the rapidly growing surveillance camera networks and the increasing demand of public safety. In recent years, the person reidentification task has become one of the core problems in intelligent surveillance and multimedia applications. This task aims to match the image sequences of pedestrians from non-overlapping cameras distributed at different physical locations. Given a tracklet taken from one camera, re-ID is the process of matching the person from tracklets of interest in another view. In practice, video re-ID faces several challenges. The image qualities of video frames tend to be rather low and pedestrians also exhibit a large range of pose variations because video acquisition is less constrained. Pedestrians in videos are usually moving, resulting in serious out-of-focus, blurring, and scale variations. Moreover, the same person in different videos may look different. When people move between cameras, the large appearance changes caused by environmental and geometric variations increases the difficulty of re-ID task. A lot of works has been proposed to deal with these issues. A typical video-based person re-id system first extracts the frame-wise features with deep convolutional neural networks (CNNs). The extracted features are fed into several recurrent neural networks (RNNs) to capture temporal structure information. Finally, the average or maximum temporal pooling procedure is conducted on the output RNNs to aggregate the features. However, the average pooling operation only considers the generic features of pedestrian sequences, and the specific features of samples in a sequence are neglected. While the maximum pooling operation concentrates on finding the local salient features, useful information may be abandoned. In this case, a video person re-id algorithm based on bi-directional long short-term memory (BiLSTM) and attention mechanism is proposed to make full use of temporal information and improve the robustness of person re-id systems for complex surveillance scenes.MethodFrom the input video sequence, the proposed algorithm breaks the long sequence into short snippets and randomly selects a constant number of frames for snippets. The snippets are fed into a pre-trained CNN network to extract the feature representation of each frame. In this method, the network can learn spatial appearance representation. Sequence representation is calculated by BiLSTM according to the temporal domain, which contains temporal motion information. BiLSTM in the network causes specific information to flow forward and backward in a flexible manner, allowing the underlying temporal information interaction to be fully exploited. After feature extraction, the frame-level and sequence-level features from the probe and gallery videos are fed into dot attention network independently. After calculating the correlation (the attention weight) between the sequence and its frames, the output sequence representation is reconstructed as a weighted sum of the frames at different spatial and temporal positions in the input sequence. In the attention mechanism, the network can alleviate sample noises and poor alignments in videos. Our network is implemented on the Pytorch platform and trained with a NVIDIA GTX 1080 GPU device. All training and testing images are rescaled to a fixed size of 256×128 pixels. The ResNet-50 with the pretrained parameters on ImageNet is considered the backbone network in our system. For network parameter training, we adopt stochastic gradient descent (SGD) with a momentum of 0.9. The learning rate is initially set as 0.001 and further divided by 10 after every 20 epochs. The batch size is set at 8 for training, and the total training process lasts for 40 epochs. The whole network is trained end-to-end with a joint identification and verification manner. During the test, the query and gallery videos are encoded to the feature vectors by using the aforementioned system. To compare the re-identification performance of the proposed method with the existing advanced methods, we adopt the cumulative matching characteristics (CMC) at rank-1, rank-5, rank-10, and rank-20 on all datasets.ResultThe proposed network is demonstrated on two public benchmark datasets including iLIDS-VID and PRID2011. For iLIDS-VID, the 600 video sequences of 300 persons are randomly split into 50% of persons for training and 50% of persons for testing. For PRID2011, we follow the experiment setup in previous methods and only use 400 video sequences of the first 200 persons, who appear in both cameras. The experiments on these two datasets are repeated 10 times with different test/train splits, and the results are averaged to ensure stable evaluation. Rank1 (represents the proportion of the queried people) results of the two datasets are 80.5% and 87.6% respectively. In the iLIDS-VID dataset, the Rank1 is increased by 4.5% compared with the second performance method. In the PRID2011 dataset, the Rank1 is increased by 3.9% compared with the second performance method. Extensive ablation studies verify the effectiveness of BiLSTM and attention mechanism. Compared with the results that only use LSTM in iLIDS-VID and PRID2011 datasets, the Rank1 (higher is better) is increased by 10.9% and 12.7%, respectively.ConclusionThis work proposes video person re-id method based on BiLSTM and attention mechanism. The proposed algorithm can effectively learn spatio-temporal features relevant for re-id task. Furthermore, the proposed BiLSTM allow temporal information not only to propagate from front to back but also in the reverse direction. The attention mechanism can adaptively select the discriminative information from the sequentially varying features. The proposed network significantly improves the recognition rate and has a practical application value. The proposed method shows improved robustness of video person re-id systems in complex scenes and outperforms several state-of-the-art approaches.关键词:computer vision;person re-identification;convolutional neural network (CNN);bi-directional long short-term memory(BiLSTM);attention mechanism15|4|4更新时间:2024-05-07 -