最新刊期

卷 23 , 期 11 , 2018

-

摘要:ObjectiveThe human brain that has evolved over a million years is perhaps the most complex and sophisticated machine in the world, carrying all the intelligent activities of human beings, such as attention, learning, memory, intuition, insight and decision making. The core of the human brain consists of billions of neurons and synapses. Each neuron "receives" information from some neurons through synapses, and then passes the processed information to other neurons through the synapse. In this way, external sensory information (i.e., visual, auditory, olfactory, taste, touch) is analyzed and processed in the brain in a complex way to form perception and cognition. Attention and memory play an important role in the cognitive process of human understanding. The development of artificial intelligence based on the memory mechanism of the brain is an advanced aspect of research. Given that "end-to-end" deep learning enables an excellent performance in tasks, such as recognition and classification, introducing attention mechanism and external memory in the deep learning model to mine information of interest in data and effectively use auxiliary information is a popular research area in artificial intelligence.MethodThis report focuses on the external memory and attention mechanism of the brain. Firstly, three representative works, namely, neural turing machine, memory networks, and differentiable neural computer, are introduced. Neural turing machine is analogous to a Turing Machine or Von Neumann architecture but is differentiable end-to-end, allowing it to be efficiently trained with gradient descent. Memory networks can reason with inference components combined with a long-term memory component and they learn how to use these components jointly. Differentiable neural computer, which consists of a neural network that can read from and write to an external memory matrix, analogous to the random-access memory in a conventional computer. Secondly, several specific applications, such as knowledge memory network for question answering, memory-driven movie question answering, and memory-driven creativity (text-to-image), are presented. For answering the factoid questions, this report present the temporality-enhanced knowledge memory network (TE-KMN), which encodes not only the content of questions and answers, but also the temporal cues in a sequence of ordered sentences that gradually remark the answer. Moreover, TE-KMN collaboratively uses external knowledge for a better understanding of a given question. For answering questions about movies, the layered memory network (LMN) that represents frame-level and clip-level movie content by the static word memory module and the dynamic subtitle memory module respectively, is introduced. To generate images depending on their corresponding narrative sentences, this report presents the visual-memory Creative Adversarial Network (vmCAN), which appropriately leverages an external visual knowledge memory in both multi-modal fusion and image synthesis. Finally, research progress of memory networks at home and abroad is compared.ResultResearch results show that 1) introducing attention mechanism and external memory structure in the deep learning model is a current hotspot in artificial intelligence research. 2) Research that focuses on memory networks at home and abroad has been intensified, and literature related to machine learning and artificial intelligence has been published at top conferences and has been increasing annually. 3) Research on memory networks is gaining popularity. An increasing number of papers have been published yearly, and this trend has been constantly growing. Thus far, 9, 4, 9, and 14 articles have been published from 2015 to 2018, respectively. 4) Memory-driven methods and approaches are general, and memory networks have been successfully used in areas, such as question answering, visual question answering, object detection, reinforcement learning, and text-to-images.ConclusionThis report shows a future work on media learning and creativity. The next generation of artificial intelligence should be never-ending learning from data, experience, and automatic reasoning. In the future, artificial intelligence should be integrated organically with human knowledge through methods such as attention mechanism, memory network, transfer learning, and reinforcement learning, so as to achieve from shallow computing to deep reasoning, from simple data-driven to data-driven combined with logic rules, from vertical domain intelligence to more general artificial intelligence.关键词:multimedia;memory network;memory augmented;knowledge augmented;media learning;media creativity14|18|1更新时间:2024-05-07

摘要:ObjectiveThe human brain that has evolved over a million years is perhaps the most complex and sophisticated machine in the world, carrying all the intelligent activities of human beings, such as attention, learning, memory, intuition, insight and decision making. The core of the human brain consists of billions of neurons and synapses. Each neuron "receives" information from some neurons through synapses, and then passes the processed information to other neurons through the synapse. In this way, external sensory information (i.e., visual, auditory, olfactory, taste, touch) is analyzed and processed in the brain in a complex way to form perception and cognition. Attention and memory play an important role in the cognitive process of human understanding. The development of artificial intelligence based on the memory mechanism of the brain is an advanced aspect of research. Given that "end-to-end" deep learning enables an excellent performance in tasks, such as recognition and classification, introducing attention mechanism and external memory in the deep learning model to mine information of interest in data and effectively use auxiliary information is a popular research area in artificial intelligence.MethodThis report focuses on the external memory and attention mechanism of the brain. Firstly, three representative works, namely, neural turing machine, memory networks, and differentiable neural computer, are introduced. Neural turing machine is analogous to a Turing Machine or Von Neumann architecture but is differentiable end-to-end, allowing it to be efficiently trained with gradient descent. Memory networks can reason with inference components combined with a long-term memory component and they learn how to use these components jointly. Differentiable neural computer, which consists of a neural network that can read from and write to an external memory matrix, analogous to the random-access memory in a conventional computer. Secondly, several specific applications, such as knowledge memory network for question answering, memory-driven movie question answering, and memory-driven creativity (text-to-image), are presented. For answering the factoid questions, this report present the temporality-enhanced knowledge memory network (TE-KMN), which encodes not only the content of questions and answers, but also the temporal cues in a sequence of ordered sentences that gradually remark the answer. Moreover, TE-KMN collaboratively uses external knowledge for a better understanding of a given question. For answering questions about movies, the layered memory network (LMN) that represents frame-level and clip-level movie content by the static word memory module and the dynamic subtitle memory module respectively, is introduced. To generate images depending on their corresponding narrative sentences, this report presents the visual-memory Creative Adversarial Network (vmCAN), which appropriately leverages an external visual knowledge memory in both multi-modal fusion and image synthesis. Finally, research progress of memory networks at home and abroad is compared.ResultResearch results show that 1) introducing attention mechanism and external memory structure in the deep learning model is a current hotspot in artificial intelligence research. 2) Research that focuses on memory networks at home and abroad has been intensified, and literature related to machine learning and artificial intelligence has been published at top conferences and has been increasing annually. 3) Research on memory networks is gaining popularity. An increasing number of papers have been published yearly, and this trend has been constantly growing. Thus far, 9, 4, 9, and 14 articles have been published from 2015 to 2018, respectively. 4) Memory-driven methods and approaches are general, and memory networks have been successfully used in areas, such as question answering, visual question answering, object detection, reinforcement learning, and text-to-images.ConclusionThis report shows a future work on media learning and creativity. The next generation of artificial intelligence should be never-ending learning from data, experience, and automatic reasoning. In the future, artificial intelligence should be integrated organically with human knowledge through methods such as attention mechanism, memory network, transfer learning, and reinforcement learning, so as to achieve from shallow computing to deep reasoning, from simple data-driven to data-driven combined with logic rules, from vertical domain intelligence to more general artificial intelligence.关键词:multimedia;memory network;memory augmented;knowledge augmented;media learning;media creativity14|18|1更新时间:2024-05-07 -

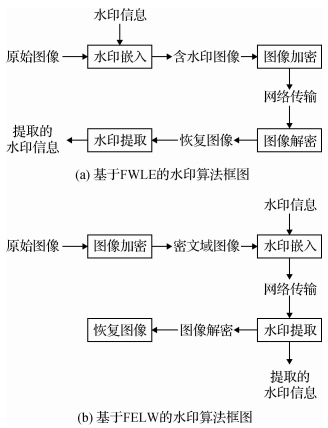

摘要:ObjectiveThe rapid evolution of cloud technology has provided users with convenience but still has security risks. Users' privacy (e.g., personal photos, corporate user information, and electronic notes) will be exposed once the cloud data are inaccessible or corrupted. Owing to the existence of security vulnerabilities or internal staff illicit activity, data may be tampered, replaced, or distributed illegally. In such a case, protecting the user' privacy is crucial. Currently and in practice, protecting the users' privacy is achieved by watermarking technology, especially reversible watermarking technology. Reversible watermarking can not only extract data correctly but also completely restore the original carrier. This technique is used in the medical, military, and other fields. Therefore, reversible watermarking technology plays an important role in the privacy protection field. Most of the existing reversible watermarking algorithms are based on the plaintext domain, such that they can be easily pirated or tampered. Thus, we proposed a reversible watermarking scheme in the homomorphic encrypted domain on the basis of Gray code for piracy tracing to enhance security and protect privacy. The proposed scheme provides support for a direct operation in the homomorphic encrypted domain. The ultimate goal of the new proposed scheme in the encrypted domain is to protect the users' privacy.MethodFirst, the "homomorphic encryption system based on Gray code" (HESGC) is proposed to encrypt the original carrier image. Gray code encryption converts the grayscale values of the original carrier image into binary values. Second, in accordance with integer homomorphic encryption, the binary values are converted into different decimal grayscale values. Subsequently, region division and classification can be reasonably performed in accordance with the "integer wavelet transform" and "human visual system" characteristics. Region division is used to avoid conflicts between watermarking and tracing proofs, and region classification fits well with human visual characteristics. Humans are sensitive to smooth regions and minimally sensitive to the textured regions. Moreover, neighboring quadratic optimization approach is presented to improve the concentration of the same regions and enhance the accuracy and reasonability of region classification. Third, we implement the proposed embedding, reversible recovery, and extraction operations. Finally, we present "joint watermarking and tracing" (JWT) strategy and utilize it to achieve piracy tracing. The JWT strategy can trace piracy and the first unauthorized person who illegally copies or distributes the image. This study uses the nonrepudiation of tracing proofs.ResultExperiments on the commonly used image database (i.e., USC-SIPI) are conducted. Six classical images from the USC-SIPI image database are selected for this experiment. The proposed algorithm has higher peak signal-to-noise ratio (PSNR) values than the existing reversible watermarking algorithms, and the PSNR value can reach 50 dB. In addition, the structural similarity index metric values of the original carrier, restored, original watermarking, and extracted watermarking images are all equal to 1. The proposed scheme achieves a reversible recovery. Furthermore, the proposed HESGC expands the original carrier image by eight times, thus increasing the capacity. Theoretically, the maximum capacity of the algorithm proposed in this study is 3.75 bit/pixel. Currently, the maximum capacity of most existing reversible watermarking algorithms is less than 1 bit/pixel. Moreover, the proposed scheme can not only achieve piracy tracing but also resist several common attacks, such as random noise, median filter, image smoothing, JPEG-coded, LZW-coded, and convolutional fuzzy attacks. We calculate the similarity value of the extracted and generated tracing proofs by the image copyright owner and determine who has the maximum similarity value to identify the piracy origin. If the similarity value is approximately 1, then it is a pirate. For other non-pirates, the similarity value is less than 1, most of which are approximately 0.6. Experimental results confirm the efficiency of the proposed scheme.ConclusionIn this study, we present the HESGC and JWT for the first time to achieve piracy tracing and reversible watermarking in an encrypted image. Most of the existing reversible watermarking algorithms directly use a binary sequence as the watermark. Alternatively, the gray image in this scheme serves as the watermark image directly; the restrictions when the binary image is used as the watermark image are removed, or the gray image is binarized as the watermark image. Moreover, cascade chaotic technology is adopted to encrypt the gray watermark image to enhance its security. We also successfully eliminate the smooth/textured islands in the textured/smooth regions, such that the block classification results are accurate and reasonable. In particular, security is an important measurement indicator for privacy protection, where only a secure watermarking system is meaningful. This study examines a triple security protection mechanism, which has the strongest security performance. The experimental results indicate that this scheme not only achieves privacy protection and piracy tracing but also has the characteristics of high security, large capacity, and high restoration quality. Moreover, this scheme can resist several common attacks, which are suitable for protecting users' privacy. The proposed algorithm focuses on the reversible watermarking technology in an encrypted image and is widely applied to digital images, such as military images, medical images, electronic invoice, and legal documents, that require high confidentiality, security, and fidelity.关键词:privacy protection;reversible watermarking;piracy tracing;HESGC(homomorphic encryption system based on gray code);JWT(joint watermarking and tracing);high capacity;high security17|4|1更新时间:2024-05-07

摘要:ObjectiveThe rapid evolution of cloud technology has provided users with convenience but still has security risks. Users' privacy (e.g., personal photos, corporate user information, and electronic notes) will be exposed once the cloud data are inaccessible or corrupted. Owing to the existence of security vulnerabilities or internal staff illicit activity, data may be tampered, replaced, or distributed illegally. In such a case, protecting the user' privacy is crucial. Currently and in practice, protecting the users' privacy is achieved by watermarking technology, especially reversible watermarking technology. Reversible watermarking can not only extract data correctly but also completely restore the original carrier. This technique is used in the medical, military, and other fields. Therefore, reversible watermarking technology plays an important role in the privacy protection field. Most of the existing reversible watermarking algorithms are based on the plaintext domain, such that they can be easily pirated or tampered. Thus, we proposed a reversible watermarking scheme in the homomorphic encrypted domain on the basis of Gray code for piracy tracing to enhance security and protect privacy. The proposed scheme provides support for a direct operation in the homomorphic encrypted domain. The ultimate goal of the new proposed scheme in the encrypted domain is to protect the users' privacy.MethodFirst, the "homomorphic encryption system based on Gray code" (HESGC) is proposed to encrypt the original carrier image. Gray code encryption converts the grayscale values of the original carrier image into binary values. Second, in accordance with integer homomorphic encryption, the binary values are converted into different decimal grayscale values. Subsequently, region division and classification can be reasonably performed in accordance with the "integer wavelet transform" and "human visual system" characteristics. Region division is used to avoid conflicts between watermarking and tracing proofs, and region classification fits well with human visual characteristics. Humans are sensitive to smooth regions and minimally sensitive to the textured regions. Moreover, neighboring quadratic optimization approach is presented to improve the concentration of the same regions and enhance the accuracy and reasonability of region classification. Third, we implement the proposed embedding, reversible recovery, and extraction operations. Finally, we present "joint watermarking and tracing" (JWT) strategy and utilize it to achieve piracy tracing. The JWT strategy can trace piracy and the first unauthorized person who illegally copies or distributes the image. This study uses the nonrepudiation of tracing proofs.ResultExperiments on the commonly used image database (i.e., USC-SIPI) are conducted. Six classical images from the USC-SIPI image database are selected for this experiment. The proposed algorithm has higher peak signal-to-noise ratio (PSNR) values than the existing reversible watermarking algorithms, and the PSNR value can reach 50 dB. In addition, the structural similarity index metric values of the original carrier, restored, original watermarking, and extracted watermarking images are all equal to 1. The proposed scheme achieves a reversible recovery. Furthermore, the proposed HESGC expands the original carrier image by eight times, thus increasing the capacity. Theoretically, the maximum capacity of the algorithm proposed in this study is 3.75 bit/pixel. Currently, the maximum capacity of most existing reversible watermarking algorithms is less than 1 bit/pixel. Moreover, the proposed scheme can not only achieve piracy tracing but also resist several common attacks, such as random noise, median filter, image smoothing, JPEG-coded, LZW-coded, and convolutional fuzzy attacks. We calculate the similarity value of the extracted and generated tracing proofs by the image copyright owner and determine who has the maximum similarity value to identify the piracy origin. If the similarity value is approximately 1, then it is a pirate. For other non-pirates, the similarity value is less than 1, most of which are approximately 0.6. Experimental results confirm the efficiency of the proposed scheme.ConclusionIn this study, we present the HESGC and JWT for the first time to achieve piracy tracing and reversible watermarking in an encrypted image. Most of the existing reversible watermarking algorithms directly use a binary sequence as the watermark. Alternatively, the gray image in this scheme serves as the watermark image directly; the restrictions when the binary image is used as the watermark image are removed, or the gray image is binarized as the watermark image. Moreover, cascade chaotic technology is adopted to encrypt the gray watermark image to enhance its security. We also successfully eliminate the smooth/textured islands in the textured/smooth regions, such that the block classification results are accurate and reasonable. In particular, security is an important measurement indicator for privacy protection, where only a secure watermarking system is meaningful. This study examines a triple security protection mechanism, which has the strongest security performance. The experimental results indicate that this scheme not only achieves privacy protection and piracy tracing but also has the characteristics of high security, large capacity, and high restoration quality. Moreover, this scheme can resist several common attacks, which are suitable for protecting users' privacy. The proposed algorithm focuses on the reversible watermarking technology in an encrypted image and is widely applied to digital images, such as military images, medical images, electronic invoice, and legal documents, that require high confidentiality, security, and fidelity.关键词:privacy protection;reversible watermarking;piracy tracing;HESGC(homomorphic encryption system based on gray code);JWT(joint watermarking and tracing);high capacity;high security17|4|1更新时间:2024-05-07 -

摘要:ObjectiveMost traditional methods used to merge sequential multi-exposure low dynamic range (LDR) images into a high dynamic range (HDR) image are sensitive to certain problems, such as noise and object motion, and must address large-scale data, which hinder the application and further development of HDR image acquisition technology. Low-rank matrix recovery can extract an aligned low-rank image with linear correlation from a sparse noise-corrupted data matrix. A new method that exploits the abovementioned feature based on the low-rank matrix recovery is proposed to merge sequential multi-exposure LDR images into an HDR image and improve the anti-noise and de-artifact performances in capturing HDR images.MethodFirst, the sequential multi-exposure LDR images are inputted and mapped to the linear luminance space by a calibrated camera response function (CRF). Second, a partial sum of singular values (PSSV) is used as an optimization objective function to build a low-rank matrix mathematical model for HDR image fusion method, which is used to merge the captured sequential multi-exposure LDR images. With the help of the proposed method, the data matrix is decomposed into low-rank and sparse matrices through the exact augmented Lagrange multiplier method, where the PSSV is the objective function. This algorithm is optimized given the motivation for an alternating direction multiplier method. An adaptive penalty factor is set to address different singular values. If a singular value tends to 0, then the algorithm will update the low-rank and sparse matrices with a new partial singular value thresholding (PSVT); otherwise, the algorithm will update the low-rank and sparse matrices with the classical PSVT. Moreover, the augmented Lagrange multiplier and penalty factor are updated simultaneously. The algorithm will terminate when the optimal solution concentrates within the space of the maximum singular value as much as possible after a finite number of iteration steps. Thus, a low-rank matrix with the light information of an entire scene, where the noises and artifacts are eliminated, is obtained. This obtained low-rank matrix is also the final merged HDR image from the captured sequential multi-exposure LDR images.ResultThe convergence and anti-noise performance are first evaluated. The proposed method and two other comparison methods are applied to the randomly generated data matrices with a size of 10 000 ×50 pixels and rank from 1 to 4. Simultaneously, a sparse noise is added to each data matrix with a ratio from 0.1 to 0.4. The comparison methods refer to robust principal component analysis (RPCA) and the PSSV. Simulation results indicate that the proposed method has better convergence and anti-noise performance than the two other comparison methods. The experimental results of various matrices with different ranks and sparse noise ratios show that the proposed method achieves low normalized mean square and solution errors. Furthermore, the proposed algorithm guarantees that the rank of the result is sufficiently lower than the original matrix. Thus, the singular value of the main information will not be considerably attenuated. This finding indicates that the new method can obtain low-rank results even when the reconstruction error is low. The performance of HDR image fusion is evaluated by analyzing the values of peak signal-to-noise ratio (PSNR) and structural similarity index metric based on perceptually uniform mapping. The experiments run with the classical sequential multi-exposure LDR images, such as memorial church and arch, to acquire the HDR images. The experimental results show that the expectation is achieved. The proposed method can eliminate the artifacts in dynamic scenes with sparse noise and improve the quality of the fused HDR images compared with the recovering high dynamic range radiance maps from photographs (RHDRRMP), RPCA, and PSSV algorithms. The RHDRRMP method cannot suppress the sparse noise and artifacts and produces poor brightness and contrast. The RPCA method cannot suppress artifacts well, and missing details and even inaccurate results have emerged. The PSSV method can obtain better results but fewer details than the proposed method. The index metrics of the PSNR and SSIM of the results obtained through the proposed method from the objective indicators are higher than those of the comparison algorithms. For the memorial church sequence without noise, the PSNR and SSIM of the RPCA method are 28.117 dB and 0.935, respectively; those of the PSSV method are 30.557 dB and 0.959, correspondingly; and those of our method are 32.550 dB and 0.968, respectively. The PSNR and SSIM of the RPCA method are 28.115 dB and 0.935, correspondingly; those of the PSSV method are 30.579 dB and 0.959, respectively; and those of the proposed method are 32.562 dB and 0.967, correspondingly. The proposed algorithm can recover the low-rank matrix to obtain the HDR image, even with few images in the multi-exposure image sequence. In this situation, the RPCA method cannot obtain the optimal solution to the low-rank matrix. The PSSV method only ensures that the variance of the singular value vectors in the data, rather than the low-rank data, is not the largest and cannot guarantee that the low-rank data have the maximum variance on the singular value vector. Overall, the results show that the proposed algorithm has better robustness than the traditional fusion methods.ConclusionIn this study, a new method based on low-rank matrix recovery optimization theory is proposed. The proposed method can merge sequential multi-exposure LDR images into an HDR image. With the help of the proposed method, the HDR image can be obtained with a low reconstruction error in the case of few datasets, and the interference of the noise and artifacts can be removed in a dynamic scene. Thus, the proposed method has better robustness than the traditional experimental methods. The demand for high-quality images can be satisfied by improving HDR images. However, the proposed method depends on the CRF, that is, an accurate CRF indicates an improved quality of the result of image fusion. The proposed method also requires the aligned sequential multi-exposure LDR images to further eliminate the serious problems of image displacement or high-speed moving objects in a scene. Otherwise, the ghost and blur phenomena will affect the fused HDR image.关键词:image fusion;high dynamic range image;low-rank matrix recovery;de-ghosting;Lagrange multiplier55|225|2更新时间:2024-05-07

摘要:ObjectiveMost traditional methods used to merge sequential multi-exposure low dynamic range (LDR) images into a high dynamic range (HDR) image are sensitive to certain problems, such as noise and object motion, and must address large-scale data, which hinder the application and further development of HDR image acquisition technology. Low-rank matrix recovery can extract an aligned low-rank image with linear correlation from a sparse noise-corrupted data matrix. A new method that exploits the abovementioned feature based on the low-rank matrix recovery is proposed to merge sequential multi-exposure LDR images into an HDR image and improve the anti-noise and de-artifact performances in capturing HDR images.MethodFirst, the sequential multi-exposure LDR images are inputted and mapped to the linear luminance space by a calibrated camera response function (CRF). Second, a partial sum of singular values (PSSV) is used as an optimization objective function to build a low-rank matrix mathematical model for HDR image fusion method, which is used to merge the captured sequential multi-exposure LDR images. With the help of the proposed method, the data matrix is decomposed into low-rank and sparse matrices through the exact augmented Lagrange multiplier method, where the PSSV is the objective function. This algorithm is optimized given the motivation for an alternating direction multiplier method. An adaptive penalty factor is set to address different singular values. If a singular value tends to 0, then the algorithm will update the low-rank and sparse matrices with a new partial singular value thresholding (PSVT); otherwise, the algorithm will update the low-rank and sparse matrices with the classical PSVT. Moreover, the augmented Lagrange multiplier and penalty factor are updated simultaneously. The algorithm will terminate when the optimal solution concentrates within the space of the maximum singular value as much as possible after a finite number of iteration steps. Thus, a low-rank matrix with the light information of an entire scene, where the noises and artifacts are eliminated, is obtained. This obtained low-rank matrix is also the final merged HDR image from the captured sequential multi-exposure LDR images.ResultThe convergence and anti-noise performance are first evaluated. The proposed method and two other comparison methods are applied to the randomly generated data matrices with a size of 10 000 ×50 pixels and rank from 1 to 4. Simultaneously, a sparse noise is added to each data matrix with a ratio from 0.1 to 0.4. The comparison methods refer to robust principal component analysis (RPCA) and the PSSV. Simulation results indicate that the proposed method has better convergence and anti-noise performance than the two other comparison methods. The experimental results of various matrices with different ranks and sparse noise ratios show that the proposed method achieves low normalized mean square and solution errors. Furthermore, the proposed algorithm guarantees that the rank of the result is sufficiently lower than the original matrix. Thus, the singular value of the main information will not be considerably attenuated. This finding indicates that the new method can obtain low-rank results even when the reconstruction error is low. The performance of HDR image fusion is evaluated by analyzing the values of peak signal-to-noise ratio (PSNR) and structural similarity index metric based on perceptually uniform mapping. The experiments run with the classical sequential multi-exposure LDR images, such as memorial church and arch, to acquire the HDR images. The experimental results show that the expectation is achieved. The proposed method can eliminate the artifacts in dynamic scenes with sparse noise and improve the quality of the fused HDR images compared with the recovering high dynamic range radiance maps from photographs (RHDRRMP), RPCA, and PSSV algorithms. The RHDRRMP method cannot suppress the sparse noise and artifacts and produces poor brightness and contrast. The RPCA method cannot suppress artifacts well, and missing details and even inaccurate results have emerged. The PSSV method can obtain better results but fewer details than the proposed method. The index metrics of the PSNR and SSIM of the results obtained through the proposed method from the objective indicators are higher than those of the comparison algorithms. For the memorial church sequence without noise, the PSNR and SSIM of the RPCA method are 28.117 dB and 0.935, respectively; those of the PSSV method are 30.557 dB and 0.959, correspondingly; and those of our method are 32.550 dB and 0.968, respectively. The PSNR and SSIM of the RPCA method are 28.115 dB and 0.935, correspondingly; those of the PSSV method are 30.579 dB and 0.959, respectively; and those of the proposed method are 32.562 dB and 0.967, correspondingly. The proposed algorithm can recover the low-rank matrix to obtain the HDR image, even with few images in the multi-exposure image sequence. In this situation, the RPCA method cannot obtain the optimal solution to the low-rank matrix. The PSSV method only ensures that the variance of the singular value vectors in the data, rather than the low-rank data, is not the largest and cannot guarantee that the low-rank data have the maximum variance on the singular value vector. Overall, the results show that the proposed algorithm has better robustness than the traditional fusion methods.ConclusionIn this study, a new method based on low-rank matrix recovery optimization theory is proposed. The proposed method can merge sequential multi-exposure LDR images into an HDR image. With the help of the proposed method, the HDR image can be obtained with a low reconstruction error in the case of few datasets, and the interference of the noise and artifacts can be removed in a dynamic scene. Thus, the proposed method has better robustness than the traditional experimental methods. The demand for high-quality images can be satisfied by improving HDR images. However, the proposed method depends on the CRF, that is, an accurate CRF indicates an improved quality of the result of image fusion. The proposed method also requires the aligned sequential multi-exposure LDR images to further eliminate the serious problems of image displacement or high-speed moving objects in a scene. Otherwise, the ghost and blur phenomena will affect the fused HDR image.关键词:image fusion;high dynamic range image;low-rank matrix recovery;de-ghosting;Lagrange multiplier55|225|2更新时间:2024-05-07 -

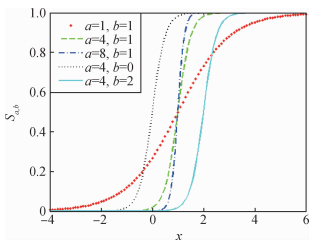

摘要:ObjectiveTexture is a repetitive pattern with high pixel values. Many natural images and works of art include textures such as cross-stitch and mosaic. In many cases, the visual system of individuals ignores the texture pattern and focuses on the main structure of an image. Texture filtering is a basic tool in the computer graphics and image processing fields; the goal of which is to suppress unnecessary texture details and maintain the salient structure in the image. In recent years, various texture filtering methods, which are mainly divided into global-and local-based filtering methods, have been proposed. Most of the existing texture filtering methods handle the small gradient texture images. However, handling the strong gradient texture and losing part of the structure is difficult. To solve this problem, we propose a texture filtering method by using texture gradient suppression and

摘要:ObjectiveTexture is a repetitive pattern with high pixel values. Many natural images and works of art include textures such as cross-stitch and mosaic. In many cases, the visual system of individuals ignores the texture pattern and focuses on the main structure of an image. Texture filtering is a basic tool in the computer graphics and image processing fields; the goal of which is to suppress unnecessary texture details and maintain the salient structure in the image. In recent years, various texture filtering methods, which are mainly divided into global-and local-based filtering methods, have been proposed. Most of the existing texture filtering methods handle the small gradient texture images. However, handling the strong gradient texture and losing part of the structure is difficult. To solve this problem, we propose a texture filtering method by using texture gradient suppression and${L_0}$ ${L_0}$ ${L_0}$ $\lambda $ $\lambda $ ${L_0}$ $\lambda $ $\lambda $ ${L_0}$ ${L_0}$ 关键词:texture filtering;${L_0}$ 15|5|1更新时间:2024-05-07

Image Processing and Cording

-

摘要:Objective Object detection has been a popular research topic in the field of computer vision and is an essential component for security video surveillance system and other computer vision applications. Image recognition, which is based on convolutional neural network, has fulfilled remarkable achievements. Many current object detection pipelines due to the deep learning can be divided into three stages as follows:1) extracts region proposals, 2) classifies and refines each region proposal, and 3) removes extra detection boxes that might belong to the same object. Non-maximum suppression (NMS) algorithm is frequently used in Stage 3 as an essential part of object detection and obtains impressive effect. Numerous studies have focused on feature design, classifier design, and object proposals, although the NMS algorithm is a core part of object detection. Few studies on the NMS algorithms exist. The NMS algorithm is used as a post-processing step of object detection to remove the redundant detection boxes. However, this algorithm suppresses all detection boxes with higher intersection-over-union (IoU) overlap than the threshold with pre-selected detection box. NMS algorithm may remove the positive detection box if the positive detection box is adjacent to the pre-selected with a high IoU value. It may also preserve the negative detection box because this box with the pre-selected detection box has a low IoU value. Mean average precision (mAP) decreases as a result of the missing and false positives; thus, the traditional NMS can also be called GreedyNMS. GreedyNMS easily causes missed and false detections.Method To overcome these shortages, an improved NMS algorithm is proposed in accordance with the different IoU values to assign a proportional penalty coefficient to reduce detection scores. The improved NMS algorithm includes the piecewise and the continuous proportional penalty factor NMS algorithms. The piecewise proportional penalty factor NMS algorithm reduces the scores of detection boxes and has a higher IoU than threshold T. The detection boxes with IoU, which is less than the threshold T, maintains its original score. The detection boxes whose scores are lower than another threshold σ are removed after many iterations. The performance of this algorithm remains limited by the threshold T. The continuous proportional penalty factor NMS algorithm no longer uses threshold T but directly reduces all detection boxes, except those with the maximum score in each iteration. In the continuous proportional penalty factor NMS algorithm, the threshold slightly affects the performance of the algorithm. The improved NMS algorithm initially calculates the proportional penalty factors the correspond to the detection boxes in accordance with the IoU value of the pre-selection detection box. The improved NMS algorithm multiplies the confidence scores of the detection boxes by the proportional penalty factors and reduces the detection box scores through the proportional penalty factor after many iterations. Moreover, the improved NMS algorithm removes the detection boxes with a score below the threshold after many iterations. The piecewise and the continuous proportional penalty factor NMS algorithms are used in each iteration in a post-processing step of object detection rather than in a region proposal network. The threshold in the continuous proportional penalty factor is less sensitive to the performance of the algorithm than the influence of the threshold in GreedyNMS. In addition, the computational complexity of the improved NMS algorithm is O(n2), which is the same as that of GreedyNMS, where

摘要:Objective Object detection has been a popular research topic in the field of computer vision and is an essential component for security video surveillance system and other computer vision applications. Image recognition, which is based on convolutional neural network, has fulfilled remarkable achievements. Many current object detection pipelines due to the deep learning can be divided into three stages as follows:1) extracts region proposals, 2) classifies and refines each region proposal, and 3) removes extra detection boxes that might belong to the same object. Non-maximum suppression (NMS) algorithm is frequently used in Stage 3 as an essential part of object detection and obtains impressive effect. Numerous studies have focused on feature design, classifier design, and object proposals, although the NMS algorithm is a core part of object detection. Few studies on the NMS algorithms exist. The NMS algorithm is used as a post-processing step of object detection to remove the redundant detection boxes. However, this algorithm suppresses all detection boxes with higher intersection-over-union (IoU) overlap than the threshold with pre-selected detection box. NMS algorithm may remove the positive detection box if the positive detection box is adjacent to the pre-selected with a high IoU value. It may also preserve the negative detection box because this box with the pre-selected detection box has a low IoU value. Mean average precision (mAP) decreases as a result of the missing and false positives; thus, the traditional NMS can also be called GreedyNMS. GreedyNMS easily causes missed and false detections.Method To overcome these shortages, an improved NMS algorithm is proposed in accordance with the different IoU values to assign a proportional penalty coefficient to reduce detection scores. The improved NMS algorithm includes the piecewise and the continuous proportional penalty factor NMS algorithms. The piecewise proportional penalty factor NMS algorithm reduces the scores of detection boxes and has a higher IoU than threshold T. The detection boxes with IoU, which is less than the threshold T, maintains its original score. The detection boxes whose scores are lower than another threshold σ are removed after many iterations. The performance of this algorithm remains limited by the threshold T. The continuous proportional penalty factor NMS algorithm no longer uses threshold T but directly reduces all detection boxes, except those with the maximum score in each iteration. In the continuous proportional penalty factor NMS algorithm, the threshold slightly affects the performance of the algorithm. The improved NMS algorithm initially calculates the proportional penalty factors the correspond to the detection boxes in accordance with the IoU value of the pre-selection detection box. The improved NMS algorithm multiplies the confidence scores of the detection boxes by the proportional penalty factors and reduces the detection box scores through the proportional penalty factor after many iterations. Moreover, the improved NMS algorithm removes the detection boxes with a score below the threshold after many iterations. The piecewise and the continuous proportional penalty factor NMS algorithms are used in each iteration in a post-processing step of object detection rather than in a region proposal network. The threshold in the continuous proportional penalty factor is less sensitive to the performance of the algorithm than the influence of the threshold in GreedyNMS. In addition, the computational complexity of the improved NMS algorithm is O(n2), which is the same as that of GreedyNMS, where$ n$ 关键词:object detection;non-maximum suppression algorithm;detection boxes;scale factor;false positives18|41|19更新时间:2024-05-07

Image Analysis and Recognition

-

摘要:Objective Visual tracking is widely applied in fields, such as video surveillance, human-computer interaction, and intelligent transportation, at present. In recent years, domestic and foreign researchers have proposed numerous tracking algorithms for this purpose. When applied to practical use, these algorithms are required to track a target extensively. However, continuously tracking a target is difficult for most algorithms given the complexity of the tracking scenario. Therefore, conducting rapid and robust tracking of a target is a key issue that must be solved when applying visual target tracking technology to practical use. TLD algorithm provides an effective solution to this issue. This study improves two aspects of the TLD algorithm to improve its tracking performance.Method First, a scale adaptive kernel correlation filter (KCF) is used as a tracker in the tracking module. The KCF algorithm cannot adapt to the scale change of the target because the size of the filter template is fixed. However, the detection module of the TLD algorithm has a certain scale adaptability. Therefore, the proposed algorithm utilizes the scale adaptive capabilities of the detection module to measure the scale of the region of interest of the KCF tracker. Moreover, the scale adjustments can enable the KCF tracker to achieve an improved tracking precision. The algorithm uses the detection module to assess the accuracy of the results of the tracking, module and selectively updates the KCF filter template in accordance with the assessed result because the tracking and detection modules are independent of each other. Second, an optical flow method in the detection module is used to preliminarily predict a target position. The optical flow method is used to estimate the target movement between two adjacent frames without any prior knowledge. The target detection area is set in accordance with the predicted position, and the size of the detection area is proportional to the target size. A three-layer cascade classifier is used to locate the target accurately after dynamically adjusting the target detection area. An anti-interference capability of the algorithm to similar objects in the scene is enhanced since the target motion information is introduced.ResultTwo sets of experiments are conducted to verify the superiority of the proposed algorithm. The first set of experiments is conducted on the OTB2013 and Temple Color 128 data platforms. The OTB2013 data platform has 50 sets of video sequences, and the Temple Color 128 data platform has 128 sets of video sequences. Results show that the tracking accuracy and success rate of the algorithm on the OTB2013 data platform are 0.761 and 0.559, respectively, and the tracking accuracy and success rate of the algorithm on the Temple Color 128 data platform are 0.678 and 0.481, correspondingly. The proposed algorithm is compared with six state-of-the art algorithms, namely, DSST, KCF, CNT, Struck, TLD, and DLT. Among all the algorithms, the proposed algorithm exhibits the optimum performance on the two data platforms, Besides, the. The average tracking speed of all test videos reaches 27.92 frame/s, thereby indicating a favorable real-time performance. In another set of experiments, the proposed algorithm and three other improved algorithms are tested and compared with the randomly selected eight sets of video sequences. The experimental results show that the proposed algorithm has the smallest center position error of 14.01, the largest overlap rate of 72.2%, and the fastest tracking speed of 26.23 frame/s, thus denoting that the proposed algorithm achieves the optimum tracking performance among all of the improved algorithms.Conclusion The proposed algorithm uses the KCF tracker to improve the capability of the algorithm to adapt to different scenes, such as occlusion, illumination change, and motion blur. Furthermore, the proposed algorithm uses the optical flow method to narrow the detection area. Consequently, the tracking speed of the algorithm is improved. The experimental results show that the proposed algorithm exhibits better tracking performance than the reference algorithm in most cases and achieves favorable tracking robustness in an extensive tracking process.关键词:visual tracking;TLD(tracking-learning-detection);kernel correlation;optical flow method;detection area adjustment12|4|3更新时间:2024-05-07

摘要:Objective Visual tracking is widely applied in fields, such as video surveillance, human-computer interaction, and intelligent transportation, at present. In recent years, domestic and foreign researchers have proposed numerous tracking algorithms for this purpose. When applied to practical use, these algorithms are required to track a target extensively. However, continuously tracking a target is difficult for most algorithms given the complexity of the tracking scenario. Therefore, conducting rapid and robust tracking of a target is a key issue that must be solved when applying visual target tracking technology to practical use. TLD algorithm provides an effective solution to this issue. This study improves two aspects of the TLD algorithm to improve its tracking performance.Method First, a scale adaptive kernel correlation filter (KCF) is used as a tracker in the tracking module. The KCF algorithm cannot adapt to the scale change of the target because the size of the filter template is fixed. However, the detection module of the TLD algorithm has a certain scale adaptability. Therefore, the proposed algorithm utilizes the scale adaptive capabilities of the detection module to measure the scale of the region of interest of the KCF tracker. Moreover, the scale adjustments can enable the KCF tracker to achieve an improved tracking precision. The algorithm uses the detection module to assess the accuracy of the results of the tracking, module and selectively updates the KCF filter template in accordance with the assessed result because the tracking and detection modules are independent of each other. Second, an optical flow method in the detection module is used to preliminarily predict a target position. The optical flow method is used to estimate the target movement between two adjacent frames without any prior knowledge. The target detection area is set in accordance with the predicted position, and the size of the detection area is proportional to the target size. A three-layer cascade classifier is used to locate the target accurately after dynamically adjusting the target detection area. An anti-interference capability of the algorithm to similar objects in the scene is enhanced since the target motion information is introduced.ResultTwo sets of experiments are conducted to verify the superiority of the proposed algorithm. The first set of experiments is conducted on the OTB2013 and Temple Color 128 data platforms. The OTB2013 data platform has 50 sets of video sequences, and the Temple Color 128 data platform has 128 sets of video sequences. Results show that the tracking accuracy and success rate of the algorithm on the OTB2013 data platform are 0.761 and 0.559, respectively, and the tracking accuracy and success rate of the algorithm on the Temple Color 128 data platform are 0.678 and 0.481, correspondingly. The proposed algorithm is compared with six state-of-the art algorithms, namely, DSST, KCF, CNT, Struck, TLD, and DLT. Among all the algorithms, the proposed algorithm exhibits the optimum performance on the two data platforms, Besides, the. The average tracking speed of all test videos reaches 27.92 frame/s, thereby indicating a favorable real-time performance. In another set of experiments, the proposed algorithm and three other improved algorithms are tested and compared with the randomly selected eight sets of video sequences. The experimental results show that the proposed algorithm has the smallest center position error of 14.01, the largest overlap rate of 72.2%, and the fastest tracking speed of 26.23 frame/s, thus denoting that the proposed algorithm achieves the optimum tracking performance among all of the improved algorithms.Conclusion The proposed algorithm uses the KCF tracker to improve the capability of the algorithm to adapt to different scenes, such as occlusion, illumination change, and motion blur. Furthermore, the proposed algorithm uses the optical flow method to narrow the detection area. Consequently, the tracking speed of the algorithm is improved. The experimental results show that the proposed algorithm exhibits better tracking performance than the reference algorithm in most cases and achieves favorable tracking robustness in an extensive tracking process.关键词:visual tracking;TLD(tracking-learning-detection);kernel correlation;optical flow method;detection area adjustment12|4|3更新时间:2024-05-07

Image Understanding and Computer Vision

-

摘要:Objective Freeform deformation techniques of curves and surfaces have received considerable attention recently with the rapid development of geometric modeling and computer animation. A new local deformation algorithm for curves based on progressive iterative approximation (PIA) and dominant point methods is proposed in this study to acquire interesting and lifelike deformation effects. The new deformation method not only produces various deformation effects but also possesses desirable properties, such as flexibility and convergence, given the PIA method.Method First, the initial deformation curves are obtained using the PIA or least squares PIA method. Second, the dominant points, which include the maximum local curvature points, and two end points are selected from the initial control points by calculating and comparing the curvatures that correspond to the control points. In this phase, we detect the maximum local curvature points using the rule that the curvature of a point must be larger than the curvatures of its neighboring points. Thus, the maximum local curvature points can be selected as the dominant points. Then, an extension rule is constructed on the region expected to be deformed. That is, we extend this region along the curve until it satisfies the two closest dominant points after selecting the region for deformation in accordance with real requirements. Thus, we can obtain a segment that is bounded by two dominant points. We classify the situation into two categories using the abovementioned extension rule on the basis of the number of dominant points in the obtained segment. The control points, which will be adjusted subsequently, are selected in accordance with the dominant points and the shape information of the curves. If the region that is prepared for deformation contains a dominant point, then three dominant points in the segment will be obtained after applying such extension rule. The dominant point in the middle is selected for subsequent adjustment. If the region that is prepared for deformation does not contain any dominant point, then only two dominant points in the segment will be obtained after extension. We select a control point in accordance with the shape information of the curve, which is useful in handling several complex deformation problems. In this situation, we first calculate the shape parameter for each control point in the obtained segment. The shape parameter represents the complexity of the curve and indicates the difference between two adjacent segments. Second, the control point that has the smallest shape parameter is selected for subsequent adjustment after comparing these parameters. We call this procedure the dominant point method. Moreover, if more than one dominant point in the region is expected to be deformed, then we can split the segment in accordance with the distribution of dominant points to ensure that each segment contains less than one dominant point. Then, we can use the abovementioned dominant point method to select the control points. Finally, local progressive iterative approximation (LPIA) method is adopted to generate the final curves after local deformation.Result The proposed deformation method selects the control points on the basis of the complexity of the shapes of the curves. The deformation method is convergent, can be executed flexibly, and can highlight the features of the deformed regions through local iteration because we use the LPIA method to fit the data set after adjustment. Numerical examples, such as teapot, face contour, and hand, show that the proposed method can obtain favorable deformation effects through the B-spline basis, which is the most commonly used basis in geometric design and exhibits excellent local properties. Specifically, the teapot mouth is stretched by adjusting the selected control point. The lips, eyebrows, and earlobes of the face contour are deformed by using the deformation algorithm to generate a fascinating face. The fingers are also stretched to make the hand natural. We also demonstrate the distortion, which occurs when we do not use the deformation method proposed in this study, as illustrated in the teapot example. We can clearly observe that, if we do not adjust the control points generated by the algorithm, then the curve after deformation will be distorted and lack reality. Furthermore, this algorithm can be used repeatedly to generate the global, local, periodic, and elastic deformation effects.Conclusion This study mainly focuses on applying the PIA method to the local curve deformations. First, we discuss the PIA method, which presents an intuitive and straightforward approach to fitting data points and provides flexibility for shape control in data fitting. Second, we introduce the notion of dominant points into the curve deformations. Finally, we propose a new deformation method on the basis of the PIA and dominant point methods by combining the two techniques. The algorithm not only possesses the properties of convergence and stability through the PIA method but also produces various positive deformation effects by selecting dominant points. A user must only select the regions expected to be deformed during the implementation of the deformation method and determine the deformation scales in accordance with his/her requirements, thus guaranteeing the interactivity of this algorithm. In summary, the proposed method significantly enriches the deformation effects of curves.关键词:progressive iterative approximation;curve deformation;local curvature;deformation effect;computer aided design12|4|0更新时间:2024-05-07

摘要:Objective Freeform deformation techniques of curves and surfaces have received considerable attention recently with the rapid development of geometric modeling and computer animation. A new local deformation algorithm for curves based on progressive iterative approximation (PIA) and dominant point methods is proposed in this study to acquire interesting and lifelike deformation effects. The new deformation method not only produces various deformation effects but also possesses desirable properties, such as flexibility and convergence, given the PIA method.Method First, the initial deformation curves are obtained using the PIA or least squares PIA method. Second, the dominant points, which include the maximum local curvature points, and two end points are selected from the initial control points by calculating and comparing the curvatures that correspond to the control points. In this phase, we detect the maximum local curvature points using the rule that the curvature of a point must be larger than the curvatures of its neighboring points. Thus, the maximum local curvature points can be selected as the dominant points. Then, an extension rule is constructed on the region expected to be deformed. That is, we extend this region along the curve until it satisfies the two closest dominant points after selecting the region for deformation in accordance with real requirements. Thus, we can obtain a segment that is bounded by two dominant points. We classify the situation into two categories using the abovementioned extension rule on the basis of the number of dominant points in the obtained segment. The control points, which will be adjusted subsequently, are selected in accordance with the dominant points and the shape information of the curves. If the region that is prepared for deformation contains a dominant point, then three dominant points in the segment will be obtained after applying such extension rule. The dominant point in the middle is selected for subsequent adjustment. If the region that is prepared for deformation does not contain any dominant point, then only two dominant points in the segment will be obtained after extension. We select a control point in accordance with the shape information of the curve, which is useful in handling several complex deformation problems. In this situation, we first calculate the shape parameter for each control point in the obtained segment. The shape parameter represents the complexity of the curve and indicates the difference between two adjacent segments. Second, the control point that has the smallest shape parameter is selected for subsequent adjustment after comparing these parameters. We call this procedure the dominant point method. Moreover, if more than one dominant point in the region is expected to be deformed, then we can split the segment in accordance with the distribution of dominant points to ensure that each segment contains less than one dominant point. Then, we can use the abovementioned dominant point method to select the control points. Finally, local progressive iterative approximation (LPIA) method is adopted to generate the final curves after local deformation.Result The proposed deformation method selects the control points on the basis of the complexity of the shapes of the curves. The deformation method is convergent, can be executed flexibly, and can highlight the features of the deformed regions through local iteration because we use the LPIA method to fit the data set after adjustment. Numerical examples, such as teapot, face contour, and hand, show that the proposed method can obtain favorable deformation effects through the B-spline basis, which is the most commonly used basis in geometric design and exhibits excellent local properties. Specifically, the teapot mouth is stretched by adjusting the selected control point. The lips, eyebrows, and earlobes of the face contour are deformed by using the deformation algorithm to generate a fascinating face. The fingers are also stretched to make the hand natural. We also demonstrate the distortion, which occurs when we do not use the deformation method proposed in this study, as illustrated in the teapot example. We can clearly observe that, if we do not adjust the control points generated by the algorithm, then the curve after deformation will be distorted and lack reality. Furthermore, this algorithm can be used repeatedly to generate the global, local, periodic, and elastic deformation effects.Conclusion This study mainly focuses on applying the PIA method to the local curve deformations. First, we discuss the PIA method, which presents an intuitive and straightforward approach to fitting data points and provides flexibility for shape control in data fitting. Second, we introduce the notion of dominant points into the curve deformations. Finally, we propose a new deformation method on the basis of the PIA and dominant point methods by combining the two techniques. The algorithm not only possesses the properties of convergence and stability through the PIA method but also produces various positive deformation effects by selecting dominant points. A user must only select the regions expected to be deformed during the implementation of the deformation method and determine the deformation scales in accordance with his/her requirements, thus guaranteeing the interactivity of this algorithm. In summary, the proposed method significantly enriches the deformation effects of curves.关键词:progressive iterative approximation;curve deformation;local curvature;deformation effect;computer aided design12|4|0更新时间:2024-05-07 -

摘要:Objective An importance sampling method based on bidirectional reflectance distribution function (BRDF) has excellent fidelity when rendering the surface of an object. However, this sampling method has a complicated form and can lead to heavy hardware storage cost, which can cause many problems when applied to practical use. These problems include high implementation complexity, low execution efficiency, and high debugging difficulty. Owing to these problems, this study provides a new method for computing the reflection direction of a light path. The new method uses weight generation technique and vector linear interpolation. This method not only reduces the complexity of the algorithm but also reduces the computational complexity of many previous sampling algorithms. The new method is also easy to implement.Method The algorithm initially calculates the direction of reflected light and subsequently combines the features of cosine and exponential functions given the direction of incident light and surface normal. This algorithm generates a weight value that has a certain distribution characteristic. The algorithm defines a parameter called ε to enable the distribution characteristic to be controllable. The surface tends to exhibit a diffuse reflection for each incoming light ray when ε is relatively small. Otherwise, the surface tends to exhibit an ideal mirror reflection. The new algorithm performs a linear interpolation between a mirror and diffuse reflection directions to obtain a new vector after the weight generation process, and the weight that was generated previously was used in this process. Finally, the algorithm obtains the desired reflection direction of a light ray by normalizing the new vector. This method efficiently simulates glossy surfaces, which exist vastly in real life.Result This study conducts a full implementation of the path tracing algorithm. The new algorithm is based on the new sampling method described previously. Nine kinds of common surface materials are selected for the rendering test through this algorithm. Experimental results are compared with the actual results obtained by the original BRDF sampling algorithm. The original data size for the BRDF parameter of each actual surface is approximately 34 MB. Notably, storing the raw BRDF data when the scene contains various material surfaces is infeasible. The rendering speed can be increased by 1.521.99 times using the fast sampling algorithm, and the relative error caused by approximation can be controlled within 8%. Moreover, the original 34 MB data used to describe the surface of the object can be replaced by only storing few floating-point numbers, which can apparently reduce hardware storage overhead considerably. This sampling method has a low hardware storage cost, and its rendered picture can still retain a high degree of realism. These features are favorable for modern hardware that is specifically designed for solving high computational complexity problems but limited to memory bandwidth. The object being rendered can achieve a smooth transition from an ideal diffuse to specular reflection and ideal mirror reflection because a smoothness parameter changes continuously. Moreover, the new algorithm unifies the sampling method used in many path tracing renderers. These renderers frequently use different sampling models when rendering various types of material surfaces to improve rendering quality. This improvement will inevitably increase thread divergence when rendering and considerably reduce the operating efficiency of the rendering program. These drawbacks are particularly evident on parallel hardware, such as GPUs, and must be avoided to a feasible extent. Thus, this algorithm condenses different rendering models used by various types of renderers and obtains a unified sampling method, even when the material properties of the surface are relatively complex to render correctly. The algorithm can also be appropriately extended and approximated by a multilayer fitting technique to simulate the material properties of the rendered surface. The algorithm has favorable scalability and practicality.Conclusion This study uses a simplified algorithm to compute the exit direction of light to replace the traditional method used in path tracing renderers without sacrificing the authenticity of the rendered image. This study also fully implements the path tracing algorithm to enable its practical application. This algorithm can effectively simulate mirror, diffuse, and glossy reflection, has extensive applications when rendering various kinds of objects that exist in real life, and can be used as an alternative approach to replace existing sampling methods. This algorithm has a low storage overhead, which is an advantage when rendering complex scenes that contain various materials without consuming numerous memory resources. This algorithm also exhibits excellent performance when rendering common isotropic materials, such as rough or diffuse surfaces, porcelain, and metals.关键词:computer graphics;path tracing;bidirectional reflectance distribution function;bidirectional reflectance distribution function(BRDF);fast sampling;GPU25|4|0更新时间:2024-05-07

摘要:Objective An importance sampling method based on bidirectional reflectance distribution function (BRDF) has excellent fidelity when rendering the surface of an object. However, this sampling method has a complicated form and can lead to heavy hardware storage cost, which can cause many problems when applied to practical use. These problems include high implementation complexity, low execution efficiency, and high debugging difficulty. Owing to these problems, this study provides a new method for computing the reflection direction of a light path. The new method uses weight generation technique and vector linear interpolation. This method not only reduces the complexity of the algorithm but also reduces the computational complexity of many previous sampling algorithms. The new method is also easy to implement.Method The algorithm initially calculates the direction of reflected light and subsequently combines the features of cosine and exponential functions given the direction of incident light and surface normal. This algorithm generates a weight value that has a certain distribution characteristic. The algorithm defines a parameter called ε to enable the distribution characteristic to be controllable. The surface tends to exhibit a diffuse reflection for each incoming light ray when ε is relatively small. Otherwise, the surface tends to exhibit an ideal mirror reflection. The new algorithm performs a linear interpolation between a mirror and diffuse reflection directions to obtain a new vector after the weight generation process, and the weight that was generated previously was used in this process. Finally, the algorithm obtains the desired reflection direction of a light ray by normalizing the new vector. This method efficiently simulates glossy surfaces, which exist vastly in real life.Result This study conducts a full implementation of the path tracing algorithm. The new algorithm is based on the new sampling method described previously. Nine kinds of common surface materials are selected for the rendering test through this algorithm. Experimental results are compared with the actual results obtained by the original BRDF sampling algorithm. The original data size for the BRDF parameter of each actual surface is approximately 34 MB. Notably, storing the raw BRDF data when the scene contains various material surfaces is infeasible. The rendering speed can be increased by 1.521.99 times using the fast sampling algorithm, and the relative error caused by approximation can be controlled within 8%. Moreover, the original 34 MB data used to describe the surface of the object can be replaced by only storing few floating-point numbers, which can apparently reduce hardware storage overhead considerably. This sampling method has a low hardware storage cost, and its rendered picture can still retain a high degree of realism. These features are favorable for modern hardware that is specifically designed for solving high computational complexity problems but limited to memory bandwidth. The object being rendered can achieve a smooth transition from an ideal diffuse to specular reflection and ideal mirror reflection because a smoothness parameter changes continuously. Moreover, the new algorithm unifies the sampling method used in many path tracing renderers. These renderers frequently use different sampling models when rendering various types of material surfaces to improve rendering quality. This improvement will inevitably increase thread divergence when rendering and considerably reduce the operating efficiency of the rendering program. These drawbacks are particularly evident on parallel hardware, such as GPUs, and must be avoided to a feasible extent. Thus, this algorithm condenses different rendering models used by various types of renderers and obtains a unified sampling method, even when the material properties of the surface are relatively complex to render correctly. The algorithm can also be appropriately extended and approximated by a multilayer fitting technique to simulate the material properties of the rendered surface. The algorithm has favorable scalability and practicality.Conclusion This study uses a simplified algorithm to compute the exit direction of light to replace the traditional method used in path tracing renderers without sacrificing the authenticity of the rendered image. This study also fully implements the path tracing algorithm to enable its practical application. This algorithm can effectively simulate mirror, diffuse, and glossy reflection, has extensive applications when rendering various kinds of objects that exist in real life, and can be used as an alternative approach to replace existing sampling methods. This algorithm has a low storage overhead, which is an advantage when rendering complex scenes that contain various materials without consuming numerous memory resources. This algorithm also exhibits excellent performance when rendering common isotropic materials, such as rough or diffuse surfaces, porcelain, and metals.关键词:computer graphics;path tracing;bidirectional reflectance distribution function;bidirectional reflectance distribution function(BRDF);fast sampling;GPU25|4|0更新时间:2024-05-07

Computer Graphics

-