最新刊期

卷 23 , 期 10 , 2018

-

摘要:ObjectiveThe appearance of generative adversarial networks (GANs) provides a new approach and a framework for the application of computer vision.GAN generates high-quality samples with unique zero-sum game and adversarial training concepts, and therefore more powerful in both feature learning and representation than traditional machine learning algorithms.Remarkable achievements have been realized in the field of computer vision, especially in sample generation, which is one of the popular topics in current research.MethodThe research and application of different GAN models based on computer vision are reviewed based on the extensive research and the latest achievements of relevant literature.The typical GAN network methods are introduced, categorized, and compared in experiments by using generation samples to present their performance and summarized the research status and development trends in computer vision fields, such as high-quality image generation, style transfer and image translation, text-image mutual generation, image inpainting, and restoration.Finally, existing major research problems are summarized and discussed, and potential future research directions are presented.ResultSince the emergence of GAN, many variations have been proposed for different fields, either structural improvement or development of theory or innovation in applications.Different GAN models have advantages and disadvantages in terms of generating examples, have significant achievements in many fields, especially the computer vision, and can generate examples such as the real ones.However, they also have unique problems, such as non-convergence, model collapse, and uncontrollability due to high degree-of-freedom.Priori hypotheses about the data in the original GAN, whose final goals are to realize infinite modeling power and fit for all distributions, hardly exits.In addition, the designs of GAN models are simple.A complex function model need not be pre-designed, and the generator and the discriminator can work normally with the back propagation algorithm.Moreover, GAN can use a machine to interact with other machines through continuous confrontation and learn the inherent laws in the real world with sufficient data training.Each aspect has two sides, and a series of problems are hidden behind the goal of infinite modeling.The generation process is extremely flexible that the stability and convergence of the training process cannot be guaranteed.Model collapse will likely occur and further training cannot be achieved.The original GAN has the following problems:disappearance of gradients, training difficulties, the losses of generator and discriminator cannot indicate the training process, the lack of diversities in the generated samples, and easy over-fitting.Discrete distributions are also difficult to generate due to the limitations of GAN.Many researchers have proposed new ways to address these problems, and several landmark models, such as DCGAN, CGAN, WGAN, WGAN-GP, EBGAN, BEGAN, InfoGAN, and LSGAN, have been introduced.DCGAN combines GAN with CNN and performs well in the field of computer vision.Furthermore, DCGAN sets a series of limitations for the CNN network so it can be trained stably and use the learned feature representation for sample generation and image classification.CGAN inputs the conditional variable (c) with the random variable (z) and the real data (x) to guide the data generation process.The conditional variable (c) can be category labels, texts, and generated targets.The straightforward improvement proves to be extremely effective and has been widely used in subsequent work.WGAN uses the Wasserstein distance to measure the distance between the real and generated samples instead of the JS divergence.The Wasserstein distance has the following advantages.It can measure distance even if the two distributions do not overlap, has excellent smoothing properties, and can solve the gradients disappearance problem to some degrees.In addition, WGAN solves the problems of instability in training, diversifies the generated examples, and does not require the careful balancing of the training of G and D.WGAN-GP replaces the weight pruning in WGAN to implement the Lipschitz constraint method.Experiments show that the quality of samples generated by WGAN-GP is higher than those of WGAN.It also provides stable training without hyperparameters and successfully trains various generating tasks.However, the convergence speed of WGAN-GP is slower, that is, it takes more time to converge under the same dataset.The EBGAN interprets GAN from the perspective of energy.It can learn the probability distributions of images with low convergence speed.The images BEGAN products are still disorganized, whereas other models have been able to express the outline of the objects roughly.However, the images generated by BEGAN have the sharpest edges and rich image diversities in the experiments.The discriminator of BEGAN draws lessons from EBGAN, and the loss of generator refers to the loss of WGAN.It also proposes a hyper parameter that can measure the diversity of generated samples to balance D and G and stabilize the training process.The internal texture of the generated images of InfoGAN is poor, and the shape of the generated objects is the same.As for the generator, in addition to the input noise (z), a controllable variable (c) is added, which contains interpretable information about the data to control the generative results, resulting in poor diversity.LSGAN can generate high quality examples because the object function of least squares loss replaces the cross-entropy loss, which partly solves the two shortcomings (i.e., low-quality and instability of training process).ConclusionGAN has significant theoretical and practical values as a new generative model.It provides a good solution to problems of insufficient sample, poor quality of generation, and difficulties in extracting features.GAN is an inclusive framework that can be combined with most deep learning algorithms to solve problems that traditional machine learning algorithms cannot solve.However, it has theoretical problems that must be solved urgently.How to generate high-quality examples and a realistic scene is worth studying.Further GAN developments are predicted in the following areas:breakthrough of theory, development of algorithm, system of evaluation, system of specialism, and combination of industry.关键词:generative adversarial networks;computer vision;image generation;style transfer;image inpainting14|18|26更新时间:2024-05-07

摘要:ObjectiveThe appearance of generative adversarial networks (GANs) provides a new approach and a framework for the application of computer vision.GAN generates high-quality samples with unique zero-sum game and adversarial training concepts, and therefore more powerful in both feature learning and representation than traditional machine learning algorithms.Remarkable achievements have been realized in the field of computer vision, especially in sample generation, which is one of the popular topics in current research.MethodThe research and application of different GAN models based on computer vision are reviewed based on the extensive research and the latest achievements of relevant literature.The typical GAN network methods are introduced, categorized, and compared in experiments by using generation samples to present their performance and summarized the research status and development trends in computer vision fields, such as high-quality image generation, style transfer and image translation, text-image mutual generation, image inpainting, and restoration.Finally, existing major research problems are summarized and discussed, and potential future research directions are presented.ResultSince the emergence of GAN, many variations have been proposed for different fields, either structural improvement or development of theory or innovation in applications.Different GAN models have advantages and disadvantages in terms of generating examples, have significant achievements in many fields, especially the computer vision, and can generate examples such as the real ones.However, they also have unique problems, such as non-convergence, model collapse, and uncontrollability due to high degree-of-freedom.Priori hypotheses about the data in the original GAN, whose final goals are to realize infinite modeling power and fit for all distributions, hardly exits.In addition, the designs of GAN models are simple.A complex function model need not be pre-designed, and the generator and the discriminator can work normally with the back propagation algorithm.Moreover, GAN can use a machine to interact with other machines through continuous confrontation and learn the inherent laws in the real world with sufficient data training.Each aspect has two sides, and a series of problems are hidden behind the goal of infinite modeling.The generation process is extremely flexible that the stability and convergence of the training process cannot be guaranteed.Model collapse will likely occur and further training cannot be achieved.The original GAN has the following problems:disappearance of gradients, training difficulties, the losses of generator and discriminator cannot indicate the training process, the lack of diversities in the generated samples, and easy over-fitting.Discrete distributions are also difficult to generate due to the limitations of GAN.Many researchers have proposed new ways to address these problems, and several landmark models, such as DCGAN, CGAN, WGAN, WGAN-GP, EBGAN, BEGAN, InfoGAN, and LSGAN, have been introduced.DCGAN combines GAN with CNN and performs well in the field of computer vision.Furthermore, DCGAN sets a series of limitations for the CNN network so it can be trained stably and use the learned feature representation for sample generation and image classification.CGAN inputs the conditional variable (c) with the random variable (z) and the real data (x) to guide the data generation process.The conditional variable (c) can be category labels, texts, and generated targets.The straightforward improvement proves to be extremely effective and has been widely used in subsequent work.WGAN uses the Wasserstein distance to measure the distance between the real and generated samples instead of the JS divergence.The Wasserstein distance has the following advantages.It can measure distance even if the two distributions do not overlap, has excellent smoothing properties, and can solve the gradients disappearance problem to some degrees.In addition, WGAN solves the problems of instability in training, diversifies the generated examples, and does not require the careful balancing of the training of G and D.WGAN-GP replaces the weight pruning in WGAN to implement the Lipschitz constraint method.Experiments show that the quality of samples generated by WGAN-GP is higher than those of WGAN.It also provides stable training without hyperparameters and successfully trains various generating tasks.However, the convergence speed of WGAN-GP is slower, that is, it takes more time to converge under the same dataset.The EBGAN interprets GAN from the perspective of energy.It can learn the probability distributions of images with low convergence speed.The images BEGAN products are still disorganized, whereas other models have been able to express the outline of the objects roughly.However, the images generated by BEGAN have the sharpest edges and rich image diversities in the experiments.The discriminator of BEGAN draws lessons from EBGAN, and the loss of generator refers to the loss of WGAN.It also proposes a hyper parameter that can measure the diversity of generated samples to balance D and G and stabilize the training process.The internal texture of the generated images of InfoGAN is poor, and the shape of the generated objects is the same.As for the generator, in addition to the input noise (z), a controllable variable (c) is added, which contains interpretable information about the data to control the generative results, resulting in poor diversity.LSGAN can generate high quality examples because the object function of least squares loss replaces the cross-entropy loss, which partly solves the two shortcomings (i.e., low-quality and instability of training process).ConclusionGAN has significant theoretical and practical values as a new generative model.It provides a good solution to problems of insufficient sample, poor quality of generation, and difficulties in extracting features.GAN is an inclusive framework that can be combined with most deep learning algorithms to solve problems that traditional machine learning algorithms cannot solve.However, it has theoretical problems that must be solved urgently.How to generate high-quality examples and a realistic scene is worth studying.Further GAN developments are predicted in the following areas:breakthrough of theory, development of algorithm, system of evaluation, system of specialism, and combination of industry.关键词:generative adversarial networks;computer vision;image generation;style transfer;image inpainting14|18|26更新时间:2024-05-07 -

摘要:ObjectivePulmonary disorder has high morbidity and mortality world-wide according to the reports by the World Health Organization.Some common pulmonary disorders include lung nodule and cancer, interstitial lung disease, chronic obstructive pulmonary disease, bronchiectasis, and pulmonary embolism.The disorders are typically characterized by long-term poor breath quality, irregular blood supply, and obstructive airflow and circulation.Pulmonary disorders bring not only enormous societal financial burden but also physical and mental suffering to patients.Thus, the recognition and comprehension of the disorders are widely considered the most basic and crucial medical tasks.High-resolution multi-slice computed tomography (CT) has received significant attention from pulmonologists and radiologists due to its allowance of investigating pulmonary anatomic function, assessing physiological conditions, and detecting and diagnosing pulmonary disorders.Hundreds of isotropic thin slices that are reconstructed real time from a single spiral CT scan can realize objective, repeatable, and non-invasive clinical inspections unlike traditional tools, especially in the early disease stage.However, the manual delineation, measurement, and evaluation of volumetric scans are extremely time-consuming and entail intensively laborious work load for clinicians.Therefore, the biomedical engineering community aims to develop semi-automated and fully automated segmentations in CT images through voxel-by-voxel labeling by using computer software to separate sub-divided pulmonary anatomic structures from one another.In the presence of unique inter-and intra-anatomy relationships and the impact of imaging defects, abnormalities, or other interference factors, classical image processing methods suffer from performance limitations.The anatomic CT visibility is attenuated and the morphology is deformed spatially and pathologically, which affect the segmentation results negatively.Several studies, usually incorporating traditional work and carefully defined processing rules, that focus on thoracic CT images have been employed.MethodIn this paper, a systematic review of anatomic segmentation methods for pulmonary tissues, airways, vasculatures, fissures, and lobes is presented by tracking and summarizing the representative or up-to-date published literature.In addition, several attractive practices and extractions of sub-divided or related structures, derived from segmented anatomic results, are also attached to corresponding anatomic subsections.They include the segmentation of adhesive, pleura nodular, and interstitial diseased lungs; the centerline extraction of airways and vasculatures; airway wall quantification and segmentation; pulmonary artery and vein separation; and pulmonary segment approximation.Moreover, for all the referred segmentation methods, the full implementation pipeline, background image processing methodology, and key techniques are presented to elaborate the result performance.Analogous methods are further classified on the basis of their designed frameworks or mathematical theories, and the merits and demerits of each method type are analyzed at the end of the classification content.In general, evaluating segmentations and comparing with other work are tedious for researchers mainly because of the difficulty in obtaining the ground truth.LOLA11, EXACT09, and VESSEL12 are three of the public and authoritative MICCAI grand challenges in chest image analysis.They are introduced for result comparisons in the directions of pulmonary tissue and lobe, airway, and vasculature.The complete procedure renders it difficult for the owners to construct their reference repository and quantify the submission performance.The reported standard generation approaches to other anatomic-based applications are illuminated in parallel.The evaluated indices contain the anatomic boundary alignment and volume overlap and the trade-off between true and false positive detection.Subsequently, the experimental validation approaches are explained based on the reference standard and indices.The qualitative and quantitative results of different methods are shown specifically with the description of the test datasets.ResultOn the basis of the individual anatomic topic, the existing challenges of state-of-the-art studies are presented in detail, highlighting the accuracy performance in true positive detection and false positive removal and the robustness performance against the diversity of CT scanners, imaging protocols, and the appearance of various abnormalities.A set of practical problems, such as lesion location, qualitative and quantitative anatomic measurement, and sequential component segmentation, are also discussed in the paper.Besides deep learning algorithms, convolutional neural network-based algorithms have rapidly become a preference of medical imaging institutions.At present, two mature chest CT imaging fields exist, namely, nodule detection and malignancy prediction in lung cancer screening and interstitial lung disease type classification.The major deep learning-based efforts are surveyed in terms of their contribution to pulmonary tissue bounding box localization and pulmonary airway segmentation and leakage removal, most of which were proposed in the recent two years.The improvements are compared with previous methods.In terms of the frontier requirements from scientific groups, industrial units, and pulmonology domains, future work trends and open issues are listed pertinent to methodology and post-processing steps along with the applications, such as the identification of pulmonary lesion sites and the subsequent task of anatomic segmentation.The parameters of anatomic measurement are of vital importance in characterizing the progress and severity of pulmonary disorders.Thus, several possible innovative points to achieve these biomarkers are also recommended.ConclusionTheoretical studies and clinical practices will benefit from implementing accurate, fast, and robust pulmonary anatomic segmentation from large amounts of CT images.The transfer or modification of successful pulmonary segmentation methodologies will facilitate the segmentation of other tissues, organs, and multi-modality images.Inter-and intra-structure measurements and the relationship mapping information obtained, ranging from global to local analyses, can provide objective and effective evidence for computer-aided pulmonary disease detection and diagnosis.The 2D transversal or 3D visualization of these points can present intuitive, legible, and proportional views of the anatomic structures with the help of volume rendering techniques and grayscale Dicom slices overlaid by chromatic tissue marks.The contribution of these aspects reduces the labor requirement from pulmonologists and radiologists, thereby increasing their efficiency significantly.Although deep learning algorithms are relatively immature and require improvement in terms of segmentation time cost and refinement steps, they have considerable potential and are worth studying for pulmonary segmentation areas, which are predicted to dominate the field in the coming years.关键词:CT images;pulmonary segmentation;pulmonary airway segmentation;pulmonary vasculature segmentation;pulmonary lobe segmentation22|19|2更新时间:2024-05-07

摘要:ObjectivePulmonary disorder has high morbidity and mortality world-wide according to the reports by the World Health Organization.Some common pulmonary disorders include lung nodule and cancer, interstitial lung disease, chronic obstructive pulmonary disease, bronchiectasis, and pulmonary embolism.The disorders are typically characterized by long-term poor breath quality, irregular blood supply, and obstructive airflow and circulation.Pulmonary disorders bring not only enormous societal financial burden but also physical and mental suffering to patients.Thus, the recognition and comprehension of the disorders are widely considered the most basic and crucial medical tasks.High-resolution multi-slice computed tomography (CT) has received significant attention from pulmonologists and radiologists due to its allowance of investigating pulmonary anatomic function, assessing physiological conditions, and detecting and diagnosing pulmonary disorders.Hundreds of isotropic thin slices that are reconstructed real time from a single spiral CT scan can realize objective, repeatable, and non-invasive clinical inspections unlike traditional tools, especially in the early disease stage.However, the manual delineation, measurement, and evaluation of volumetric scans are extremely time-consuming and entail intensively laborious work load for clinicians.Therefore, the biomedical engineering community aims to develop semi-automated and fully automated segmentations in CT images through voxel-by-voxel labeling by using computer software to separate sub-divided pulmonary anatomic structures from one another.In the presence of unique inter-and intra-anatomy relationships and the impact of imaging defects, abnormalities, or other interference factors, classical image processing methods suffer from performance limitations.The anatomic CT visibility is attenuated and the morphology is deformed spatially and pathologically, which affect the segmentation results negatively.Several studies, usually incorporating traditional work and carefully defined processing rules, that focus on thoracic CT images have been employed.MethodIn this paper, a systematic review of anatomic segmentation methods for pulmonary tissues, airways, vasculatures, fissures, and lobes is presented by tracking and summarizing the representative or up-to-date published literature.In addition, several attractive practices and extractions of sub-divided or related structures, derived from segmented anatomic results, are also attached to corresponding anatomic subsections.They include the segmentation of adhesive, pleura nodular, and interstitial diseased lungs; the centerline extraction of airways and vasculatures; airway wall quantification and segmentation; pulmonary artery and vein separation; and pulmonary segment approximation.Moreover, for all the referred segmentation methods, the full implementation pipeline, background image processing methodology, and key techniques are presented to elaborate the result performance.Analogous methods are further classified on the basis of their designed frameworks or mathematical theories, and the merits and demerits of each method type are analyzed at the end of the classification content.In general, evaluating segmentations and comparing with other work are tedious for researchers mainly because of the difficulty in obtaining the ground truth.LOLA11, EXACT09, and VESSEL12 are three of the public and authoritative MICCAI grand challenges in chest image analysis.They are introduced for result comparisons in the directions of pulmonary tissue and lobe, airway, and vasculature.The complete procedure renders it difficult for the owners to construct their reference repository and quantify the submission performance.The reported standard generation approaches to other anatomic-based applications are illuminated in parallel.The evaluated indices contain the anatomic boundary alignment and volume overlap and the trade-off between true and false positive detection.Subsequently, the experimental validation approaches are explained based on the reference standard and indices.The qualitative and quantitative results of different methods are shown specifically with the description of the test datasets.ResultOn the basis of the individual anatomic topic, the existing challenges of state-of-the-art studies are presented in detail, highlighting the accuracy performance in true positive detection and false positive removal and the robustness performance against the diversity of CT scanners, imaging protocols, and the appearance of various abnormalities.A set of practical problems, such as lesion location, qualitative and quantitative anatomic measurement, and sequential component segmentation, are also discussed in the paper.Besides deep learning algorithms, convolutional neural network-based algorithms have rapidly become a preference of medical imaging institutions.At present, two mature chest CT imaging fields exist, namely, nodule detection and malignancy prediction in lung cancer screening and interstitial lung disease type classification.The major deep learning-based efforts are surveyed in terms of their contribution to pulmonary tissue bounding box localization and pulmonary airway segmentation and leakage removal, most of which were proposed in the recent two years.The improvements are compared with previous methods.In terms of the frontier requirements from scientific groups, industrial units, and pulmonology domains, future work trends and open issues are listed pertinent to methodology and post-processing steps along with the applications, such as the identification of pulmonary lesion sites and the subsequent task of anatomic segmentation.The parameters of anatomic measurement are of vital importance in characterizing the progress and severity of pulmonary disorders.Thus, several possible innovative points to achieve these biomarkers are also recommended.ConclusionTheoretical studies and clinical practices will benefit from implementing accurate, fast, and robust pulmonary anatomic segmentation from large amounts of CT images.The transfer or modification of successful pulmonary segmentation methodologies will facilitate the segmentation of other tissues, organs, and multi-modality images.Inter-and intra-structure measurements and the relationship mapping information obtained, ranging from global to local analyses, can provide objective and effective evidence for computer-aided pulmonary disease detection and diagnosis.The 2D transversal or 3D visualization of these points can present intuitive, legible, and proportional views of the anatomic structures with the help of volume rendering techniques and grayscale Dicom slices overlaid by chromatic tissue marks.The contribution of these aspects reduces the labor requirement from pulmonologists and radiologists, thereby increasing their efficiency significantly.Although deep learning algorithms are relatively immature and require improvement in terms of segmentation time cost and refinement steps, they have considerable potential and are worth studying for pulmonary segmentation areas, which are predicted to dominate the field in the coming years.关键词:CT images;pulmonary segmentation;pulmonary airway segmentation;pulmonary vasculature segmentation;pulmonary lobe segmentation22|19|2更新时间:2024-05-07

Review

-

摘要:ObjectiveImage steganalysis is the opposite technology of steganography; it aims to detect, extract, restore, and destroy secret messages embedded in cover images.As an important technical tool for image information security, image steganalysis has become popular in multimedia information security to researchers all over the world.The basic concept of the current image steganalysis is to analyze the embedding mechanism and the statistical changes in image data caused by embedding secret messages.Images steganalysis overcomes the binary classification problem by using the cover and stego images of two image categories.The performance of steganalysis methods depends on feature extraction, and steganalysis features are expected to have small within-class scatter distances and big between-class scatter distances.However, embedded changes are not only correlated with steganography methods but also with image content and local statistical characteristics.The changes in steganalysis features caused by secret embedding are subtle, especially when the embedding ratio is low.The contents and statistical characteristics of images have a stronger impact on the distribution of steganalysis features than the embedding process.Thus, the steganalysis features of cover and stego images are inseparable, a scenario that can be attributed to the differences in image statistical characteristics.Consequently, image steganalysis becomes a classification problem with large within-class and small between-class scatter distances.To solve this problem, a new steganalysis framework for JPEG images, which aims to reduce the within-class scatter distances, is proposed.MethodThe secret messages after embedding will have different effects on the characteristics of images with different content complexities, while the steganalysis features of the images with the same content complexity are similar.This study on image steganalysis focuses on reducing the differences of image statistical characteristics caused by various contents and processing methods.The motivation of the new model is introduced by analyzing the Fisher linear discriminant analysis, which is the basis of the ensemble classifier, the most used one in steganalysis applications, and a new steganalysis model of JPEG images based on image classification and segmentation is proposed.We define a content complexity evaluation feature for each image, and the given images are first classified according to the content.Thus, the images classified to the same sub-class will have a closer content complexity.Then, each image is segmented to several sub-images according to the evaluated texture features and the complexity of each sub-block.During segmentation, we first categorize the image blocks according to texture complexity, and then amalgamate the adjacent block categories.After the combined classification and segmentation process, the content texture of the same class of image regions is more similar, and the steganalysis features are more centralized.The steganalysis features are extracted separately from each subset with the same or close texture complexity to build a classifier.When deciding which steganalysis feature set to extract, we mainly consider the performance.In our prior work, we found that when extracting a steganalysis feature set with low dimension, the performance of the method based on classification or segmentation can be obviously improved.However, when extracting high-dimensional steganalysis features, such as JPEG rich model (JRM), the performance is unsatisfactory because the rich model is based on the residual of the given image, and it can eliminate the effect of image content.The JRM feature set is sensitive to subtle image details, and the steganalysis result is good.However, we still extract the JRM feature set, which is the most representative high-dimensional feature set in the JPEG domain, to prove the validity of the proposed model.In the testing phase, the steganalysis features of each segmented sub-image in each sub-class are sent to the corresponding classifier.The final steganalysis result is obtained through the weighted fusing process.ResultIn the experiment, we compute two kinds of separability criteria of the tested steganalysis feature set, including the separability criterion based on the within-and between-class distances and the Bhattacharyya distances.The Bhattacharyya distance is one of the most used separability criteria on the basis of the probability density of classified samples.Both separability criteria of the proposed method are obviously improved, which means that the proposed classified and segmentation-based steganalysis features can be more easily categorized, thereby verifying the validity of the proposed steganalysis model.We also compare the classification performance of the proposed method and the prior work in various experimental circumstances, including the use of the same and different training and testing image databases.We compute the detection results for the original feature set, the features extracted from the classified image and the segmented image, and the image combined classification and segmentation.Experimental results show that in both circumstances, the combined classification and segmentation process can effectively improve the performance by up to 10%.The improvement considerably higher when the training and testing images have different statistical features, which implies that the proposed method is suitable for practical application on images from the Internet with considerable diversity in sources, processing methods, and contents.ConclusionIn this paper, a new steganalysis model for JPEG images is proposed.The differences in image statistical characteristics caused by various contents and processing methods are reduced by image classification and segmentation.The JRM feature set was extracted.The theoretical analysis of and the experimental results for several diverse image databases and circumstances demonstrate the validity of the framework.When a considerable diversity in image sources and contents exists, such as different training and testing images, the performance improvement of the proposed method is obvious, indicating that the performance of the proposed method does not depending highly on image content.Furthermore, the proposed steganalysis model is suitable for practical application in complex network environments.关键词:steganalysis;image statistical characteristics;image classification;image segmentation;weighted fusing23|91|2更新时间:2024-05-07

摘要:ObjectiveImage steganalysis is the opposite technology of steganography; it aims to detect, extract, restore, and destroy secret messages embedded in cover images.As an important technical tool for image information security, image steganalysis has become popular in multimedia information security to researchers all over the world.The basic concept of the current image steganalysis is to analyze the embedding mechanism and the statistical changes in image data caused by embedding secret messages.Images steganalysis overcomes the binary classification problem by using the cover and stego images of two image categories.The performance of steganalysis methods depends on feature extraction, and steganalysis features are expected to have small within-class scatter distances and big between-class scatter distances.However, embedded changes are not only correlated with steganography methods but also with image content and local statistical characteristics.The changes in steganalysis features caused by secret embedding are subtle, especially when the embedding ratio is low.The contents and statistical characteristics of images have a stronger impact on the distribution of steganalysis features than the embedding process.Thus, the steganalysis features of cover and stego images are inseparable, a scenario that can be attributed to the differences in image statistical characteristics.Consequently, image steganalysis becomes a classification problem with large within-class and small between-class scatter distances.To solve this problem, a new steganalysis framework for JPEG images, which aims to reduce the within-class scatter distances, is proposed.MethodThe secret messages after embedding will have different effects on the characteristics of images with different content complexities, while the steganalysis features of the images with the same content complexity are similar.This study on image steganalysis focuses on reducing the differences of image statistical characteristics caused by various contents and processing methods.The motivation of the new model is introduced by analyzing the Fisher linear discriminant analysis, which is the basis of the ensemble classifier, the most used one in steganalysis applications, and a new steganalysis model of JPEG images based on image classification and segmentation is proposed.We define a content complexity evaluation feature for each image, and the given images are first classified according to the content.Thus, the images classified to the same sub-class will have a closer content complexity.Then, each image is segmented to several sub-images according to the evaluated texture features and the complexity of each sub-block.During segmentation, we first categorize the image blocks according to texture complexity, and then amalgamate the adjacent block categories.After the combined classification and segmentation process, the content texture of the same class of image regions is more similar, and the steganalysis features are more centralized.The steganalysis features are extracted separately from each subset with the same or close texture complexity to build a classifier.When deciding which steganalysis feature set to extract, we mainly consider the performance.In our prior work, we found that when extracting a steganalysis feature set with low dimension, the performance of the method based on classification or segmentation can be obviously improved.However, when extracting high-dimensional steganalysis features, such as JPEG rich model (JRM), the performance is unsatisfactory because the rich model is based on the residual of the given image, and it can eliminate the effect of image content.The JRM feature set is sensitive to subtle image details, and the steganalysis result is good.However, we still extract the JRM feature set, which is the most representative high-dimensional feature set in the JPEG domain, to prove the validity of the proposed model.In the testing phase, the steganalysis features of each segmented sub-image in each sub-class are sent to the corresponding classifier.The final steganalysis result is obtained through the weighted fusing process.ResultIn the experiment, we compute two kinds of separability criteria of the tested steganalysis feature set, including the separability criterion based on the within-and between-class distances and the Bhattacharyya distances.The Bhattacharyya distance is one of the most used separability criteria on the basis of the probability density of classified samples.Both separability criteria of the proposed method are obviously improved, which means that the proposed classified and segmentation-based steganalysis features can be more easily categorized, thereby verifying the validity of the proposed steganalysis model.We also compare the classification performance of the proposed method and the prior work in various experimental circumstances, including the use of the same and different training and testing image databases.We compute the detection results for the original feature set, the features extracted from the classified image and the segmented image, and the image combined classification and segmentation.Experimental results show that in both circumstances, the combined classification and segmentation process can effectively improve the performance by up to 10%.The improvement considerably higher when the training and testing images have different statistical features, which implies that the proposed method is suitable for practical application on images from the Internet with considerable diversity in sources, processing methods, and contents.ConclusionIn this paper, a new steganalysis model for JPEG images is proposed.The differences in image statistical characteristics caused by various contents and processing methods are reduced by image classification and segmentation.The JRM feature set was extracted.The theoretical analysis of and the experimental results for several diverse image databases and circumstances demonstrate the validity of the framework.When a considerable diversity in image sources and contents exists, such as different training and testing images, the performance improvement of the proposed method is obvious, indicating that the performance of the proposed method does not depending highly on image content.Furthermore, the proposed steganalysis model is suitable for practical application in complex network environments.关键词:steganalysis;image statistical characteristics;image classification;image segmentation;weighted fusing23|91|2更新时间:2024-05-07 -

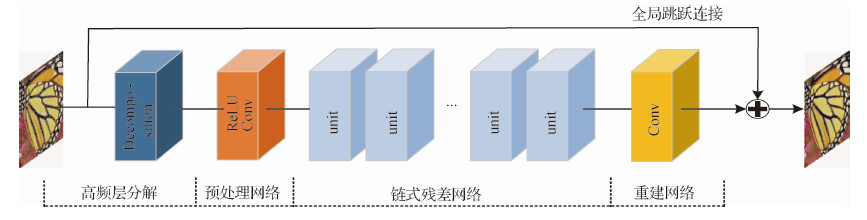

摘要:Objective Image denoising is a classical image reconstruction problem in low-level computer vision.It estimates the latent clean image from a noisy one.Digital images are often affected by the noise caused by imaging equipment and external environment in the process of digitization and transmission.Although several methods have achieved reasonable results in recent years, they rarely mentioned the over-smoothing effects and the loss of edge details.Thus, a novel image denoising method via residual learning based on edge enhancement is proposed.Method Recently, due to its powerful learning ability, very deep convolutional neural network has been widely used for image restoration.Inspired by ResNet, unlike other direct denoising networks, identity mappings are introduced to enable our residual network to increase the depth, and then slightly modify the architecture to adapt better to the denoising task.Pooling layers and batch normalization are removed to preserve details.Instead of these, high-frequency layer decomposition and global skip connection are used to prevent over-fitting.They change the input and output of the network to reduce the solution space.To speed up the training process, we select the rectified linear unit (ReLU) as the activation function and remove it before the convolution layer.Traditionally, image restoration work used the per-pixel loss between the ground truth and the restored image as the optimization target to obtain excellent quantitative scores.However, in recent research, minimizing pixel-wise errors only on the basis of low-level pixels has proven prone to loss of details and smoothens the results.Meanwhile, the perceptual loss function has shown that it can generate high-quality images with a better visual performance by capturing the difference between the high-level feature representations, but it sometimes fails to preserve color and local spatial information.To combine both benefits, we propose a new joint loss function that consists of a normal pixel-to-pixel loss and a perceptual loss with appropriate weights.In summary, the flow of our method is described as follows.First, the high-frequency layer of the noisy image is used as the input by removing the background information.Then, a residual mapping is trained to predict the difference between clean and noisy images as output instead of the final denoised image.The denoised result is improved further, and a joint loss function is defined as the weighted sum of the pixel-to-pixel Euclidean and perceptual losses.A well-trained convolutional neural network is connected to learn the semantic information, which we will likely measure in our perceptual loss.This setup encourages the train process to learn similar feature representations rather than match each low-level pixel, which can guide the front denoising network in reconstructing more edges and details.Unlike normal denoising models for only one specific noise level, our single model can deal with the noise of unknown levels (i.e., blind denoising).We employ CBSD400 as the training set and evaluate the quality in Set5, Set14, and CBSD100 with noise levels of 15, 25, and 50, respectively.To train the network for a specific noise level, we generate the noisy images by adding Gaussian noise with standard deviations of σ=15, 25, 50.Alternatively, we train a single blind network for the unknown noise range [1,50].Result To verify the effectiveness of the proposed network, we show the quantitative and qualitative results of our method in comparison to those of state-of-the-art methods, including BM3D, TNRD, and DnCNN.The performance of the algorithm is evaluated by the peak signal-to-noise ratio as the quantitative indicator.Results show that the proposed network training with MSE loss can solely produce the best index results.The proposed algorithm (MSE-S) is better by 0.63 dB、0.55 dB and 0.17 dB compared with BM3D, TNRD, and DnCNN, respectively.In the qualitative visual sense, the perceptual loss model proposed in this paper clearly achieves a clearer denoising result.Compared with the fuzzy regions generated by other methods, this method preserves more edge information and texture details.We perform another experiment to show the ability of blind denoising.The input is composed of noisy parts with three levels, 10, 30, and 50.Results indicate that our blind model can generate a satisfactory restored output without artifacts even when the input is corrupted by several levels of noise in different parts.Conclusion In this paper, we describe a deep residual denoising network of 26 weight layers where perceptual loss is adopted to enhance the information detail.Residual learning and high-frequency layer decomposition are used to reduce the solution space to speed up the training process without pooling layers and batch normalization.Unlike the normal denoising model for only one specific noise level, our new model can deal with blind denoising problems with different unknown noise levels.The experiments show that the proposed network achieves superior performances both in quantitative and qualitative results, and recovers majority of the missing details from low-quality observations.In the future, we will explore how to handle other kinds of noise, especially the complex real-world noise, and consider a single comprehensive network for more image restoration tasks.In addition, we will likely focus on researching more visually perceptible indicators in addition to PSNR.关键词:image denoising;residual learning;perceptual loss;hierarchical mode29|11|12更新时间:2024-05-07

摘要:Objective Image denoising is a classical image reconstruction problem in low-level computer vision.It estimates the latent clean image from a noisy one.Digital images are often affected by the noise caused by imaging equipment and external environment in the process of digitization and transmission.Although several methods have achieved reasonable results in recent years, they rarely mentioned the over-smoothing effects and the loss of edge details.Thus, a novel image denoising method via residual learning based on edge enhancement is proposed.Method Recently, due to its powerful learning ability, very deep convolutional neural network has been widely used for image restoration.Inspired by ResNet, unlike other direct denoising networks, identity mappings are introduced to enable our residual network to increase the depth, and then slightly modify the architecture to adapt better to the denoising task.Pooling layers and batch normalization are removed to preserve details.Instead of these, high-frequency layer decomposition and global skip connection are used to prevent over-fitting.They change the input and output of the network to reduce the solution space.To speed up the training process, we select the rectified linear unit (ReLU) as the activation function and remove it before the convolution layer.Traditionally, image restoration work used the per-pixel loss between the ground truth and the restored image as the optimization target to obtain excellent quantitative scores.However, in recent research, minimizing pixel-wise errors only on the basis of low-level pixels has proven prone to loss of details and smoothens the results.Meanwhile, the perceptual loss function has shown that it can generate high-quality images with a better visual performance by capturing the difference between the high-level feature representations, but it sometimes fails to preserve color and local spatial information.To combine both benefits, we propose a new joint loss function that consists of a normal pixel-to-pixel loss and a perceptual loss with appropriate weights.In summary, the flow of our method is described as follows.First, the high-frequency layer of the noisy image is used as the input by removing the background information.Then, a residual mapping is trained to predict the difference between clean and noisy images as output instead of the final denoised image.The denoised result is improved further, and a joint loss function is defined as the weighted sum of the pixel-to-pixel Euclidean and perceptual losses.A well-trained convolutional neural network is connected to learn the semantic information, which we will likely measure in our perceptual loss.This setup encourages the train process to learn similar feature representations rather than match each low-level pixel, which can guide the front denoising network in reconstructing more edges and details.Unlike normal denoising models for only one specific noise level, our single model can deal with the noise of unknown levels (i.e., blind denoising).We employ CBSD400 as the training set and evaluate the quality in Set5, Set14, and CBSD100 with noise levels of 15, 25, and 50, respectively.To train the network for a specific noise level, we generate the noisy images by adding Gaussian noise with standard deviations of σ=15, 25, 50.Alternatively, we train a single blind network for the unknown noise range [1,50].Result To verify the effectiveness of the proposed network, we show the quantitative and qualitative results of our method in comparison to those of state-of-the-art methods, including BM3D, TNRD, and DnCNN.The performance of the algorithm is evaluated by the peak signal-to-noise ratio as the quantitative indicator.Results show that the proposed network training with MSE loss can solely produce the best index results.The proposed algorithm (MSE-S) is better by 0.63 dB、0.55 dB and 0.17 dB compared with BM3D, TNRD, and DnCNN, respectively.In the qualitative visual sense, the perceptual loss model proposed in this paper clearly achieves a clearer denoising result.Compared with the fuzzy regions generated by other methods, this method preserves more edge information and texture details.We perform another experiment to show the ability of blind denoising.The input is composed of noisy parts with three levels, 10, 30, and 50.Results indicate that our blind model can generate a satisfactory restored output without artifacts even when the input is corrupted by several levels of noise in different parts.Conclusion In this paper, we describe a deep residual denoising network of 26 weight layers where perceptual loss is adopted to enhance the information detail.Residual learning and high-frequency layer decomposition are used to reduce the solution space to speed up the training process without pooling layers and batch normalization.Unlike the normal denoising model for only one specific noise level, our new model can deal with blind denoising problems with different unknown noise levels.The experiments show that the proposed network achieves superior performances both in quantitative and qualitative results, and recovers majority of the missing details from low-quality observations.In the future, we will explore how to handle other kinds of noise, especially the complex real-world noise, and consider a single comprehensive network for more image restoration tasks.In addition, we will likely focus on researching more visually perceptible indicators in addition to PSNR.关键词:image denoising;residual learning;perceptual loss;hierarchical mode29|11|12更新时间:2024-05-07 -

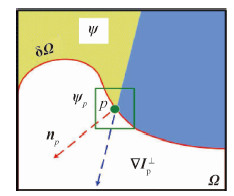

摘要:ObjectiveThe rapid development of multimedia information technology has led to images that have become the main carrier of information in people's lives.People communicate information through various means, such as voice, images, text, and video.Consequently, digital image inpainting technology has gradually attracted increasing attention, and its application fields are extensive.Digital image inpainting refers to the process of repairing or rebuilding missing information in damaged images by using a specific image inpainting algorithm, such that the observer cannot easily detect that the image has been repaired or damaged.Image inpainting technology has been used in many research areas, such as the restoration of old photos, the removal of image text, and the preservation of cultural relics.The traditional exemplar-based image inpainting algorithm only uses gradient information and the color information of the image to repair damaged areas, which can easily generate incorrect filling patches.In addition, the definition of the priority function is unreasonable, and thus causes the wrong filling order during inpainting, which affects the overall restoration effect.To solve these problems, an improved color image inpainting algorithm that is based on structure tensor is presented in this paper.Method Structure tensor is often used to analyze the local geometry of an image, which not only contains the intensity information of the local region, but also the main directions of the neighborhood gradient of a pixel and the degree of coherence of these directions.Its two eigenvalues can distinguish the edge, texture, and flat areas of an image.First, the proposed algorithm uses the structure tensor to define the data items to ensure that the structure information of the image can be transmitted accurately, and then uses the data items to form a new priority function for a more precise filling order.Second, different sizes of sample patches can be used to search for the best matching patch because an image has different structural features in different regions.Therefore, the size of the sample patch is adaptively selected according to the average coherence of the structure tensor.In other words, when the average coherence of the patch to be repaired is large, this patch is at the edge of the image and a small sample patch should be used; when the average coherence is small, it is in the flat region of the image and a large sample patch should be used.In this manner, when repairing complex damaged images, the continuity of the edge structure can be maintained; the flat area of the image can be effectively repaired.Finally, the traditional inpainting algorithm only uses the color information of the image to find the best matching patch, which renders the matching patch sub optimal.In this study, the eigenvalues of the structure tensor are added to the matching criteria to reduce the false matching rate.ResultExperimental results show that the improved algorithm is more effective in subjective vision than the other related algorithms.Moreover, the improved algorithm can achieve good results for different types of damaged images and effectively maintain the smoothness of the edge structure of the image.Compared with the traditional Criminisi algorithm, the power signal-to-noise ratio of the result has improved by approximately 1~3 dB and enhanced structure similarity.In addition, the proposed algorithm has a higher running time than other algorithms because in the inpainting process, the proposed algorithm uses the adaptive sample patch size to search for the best matching patch, and when analyzing the local structural features of the image, it needs to calculate the coherence factor of the pixels.These steps consequently increase the running time and reduce the efficiency of image inpainting.Conclusion When the traditional algorithm repairs the strong damaged area of the edge, the structural integrity and the good visual effect of the image are difficult to balance.In this study, we use the structure tensor of the color image to analyze the structure and texture area of the image.This paper discusses a color image inpainting algorithm based on the structure tensor.The proposed algorithm first uses the eigenvalue of the structure tensor instead of the isophote line in the traditional algorithm to improve the data items, which can spread the structure information of the image more accurately.Then, the average coherence factor of the structure tensor is used to analyze the texture and structural features of the image to repair the different image structural features.Finally, the matching rate used to select the best matching patch is improved with the addition of the constraint of the structural tensor to the traditional matching criteria.The proposed algorithm can obtain a better visual effect for damaged images with different structural features.The proposed algorithm can also effectively maintain the structural integrity of the image, and the complex texture area does not exhibit a wrong filled patch.Moreover, the large object and text removals by the proposed algorithm also have a good restoration effect.Compared with related Criminisi algorithms, the proposed algorithm has a better repair effect on complex linear structure and texture regions and effectively improves the overall quality of image restoration.关键词:color image restoration;structure tensor;adaptive patch size;matching criterion;the average coherence13|5|4更新时间:2024-05-07

摘要:ObjectiveThe rapid development of multimedia information technology has led to images that have become the main carrier of information in people's lives.People communicate information through various means, such as voice, images, text, and video.Consequently, digital image inpainting technology has gradually attracted increasing attention, and its application fields are extensive.Digital image inpainting refers to the process of repairing or rebuilding missing information in damaged images by using a specific image inpainting algorithm, such that the observer cannot easily detect that the image has been repaired or damaged.Image inpainting technology has been used in many research areas, such as the restoration of old photos, the removal of image text, and the preservation of cultural relics.The traditional exemplar-based image inpainting algorithm only uses gradient information and the color information of the image to repair damaged areas, which can easily generate incorrect filling patches.In addition, the definition of the priority function is unreasonable, and thus causes the wrong filling order during inpainting, which affects the overall restoration effect.To solve these problems, an improved color image inpainting algorithm that is based on structure tensor is presented in this paper.Method Structure tensor is often used to analyze the local geometry of an image, which not only contains the intensity information of the local region, but also the main directions of the neighborhood gradient of a pixel and the degree of coherence of these directions.Its two eigenvalues can distinguish the edge, texture, and flat areas of an image.First, the proposed algorithm uses the structure tensor to define the data items to ensure that the structure information of the image can be transmitted accurately, and then uses the data items to form a new priority function for a more precise filling order.Second, different sizes of sample patches can be used to search for the best matching patch because an image has different structural features in different regions.Therefore, the size of the sample patch is adaptively selected according to the average coherence of the structure tensor.In other words, when the average coherence of the patch to be repaired is large, this patch is at the edge of the image and a small sample patch should be used; when the average coherence is small, it is in the flat region of the image and a large sample patch should be used.In this manner, when repairing complex damaged images, the continuity of the edge structure can be maintained; the flat area of the image can be effectively repaired.Finally, the traditional inpainting algorithm only uses the color information of the image to find the best matching patch, which renders the matching patch sub optimal.In this study, the eigenvalues of the structure tensor are added to the matching criteria to reduce the false matching rate.ResultExperimental results show that the improved algorithm is more effective in subjective vision than the other related algorithms.Moreover, the improved algorithm can achieve good results for different types of damaged images and effectively maintain the smoothness of the edge structure of the image.Compared with the traditional Criminisi algorithm, the power signal-to-noise ratio of the result has improved by approximately 1~3 dB and enhanced structure similarity.In addition, the proposed algorithm has a higher running time than other algorithms because in the inpainting process, the proposed algorithm uses the adaptive sample patch size to search for the best matching patch, and when analyzing the local structural features of the image, it needs to calculate the coherence factor of the pixels.These steps consequently increase the running time and reduce the efficiency of image inpainting.Conclusion When the traditional algorithm repairs the strong damaged area of the edge, the structural integrity and the good visual effect of the image are difficult to balance.In this study, we use the structure tensor of the color image to analyze the structure and texture area of the image.This paper discusses a color image inpainting algorithm based on the structure tensor.The proposed algorithm first uses the eigenvalue of the structure tensor instead of the isophote line in the traditional algorithm to improve the data items, which can spread the structure information of the image more accurately.Then, the average coherence factor of the structure tensor is used to analyze the texture and structural features of the image to repair the different image structural features.Finally, the matching rate used to select the best matching patch is improved with the addition of the constraint of the structural tensor to the traditional matching criteria.The proposed algorithm can obtain a better visual effect for damaged images with different structural features.The proposed algorithm can also effectively maintain the structural integrity of the image, and the complex texture area does not exhibit a wrong filled patch.Moreover, the large object and text removals by the proposed algorithm also have a good restoration effect.Compared with related Criminisi algorithms, the proposed algorithm has a better repair effect on complex linear structure and texture regions and effectively improves the overall quality of image restoration.关键词:color image restoration;structure tensor;adaptive patch size;matching criterion;the average coherence13|5|4更新时间:2024-05-07 -

摘要:Objective Depth map plays an increasingly important role in many computer vision applications, such as 3D reconstruction, augmented reality, and gesture recognition.A new generation of active 3D range sensors, such as Microsoft Kinect camera, enables the acquisition of a real-time and affordable depth map.Incidentally, unlike natural images captured by RGB sensors, the depth maps captured by range sensors typically have low resolution (LR) and inaccurate edges due to their intrinsic physical constraints.Given that an accurate and high-resolution (HR) depth map is required and preferable in many applications, excellent depth map super-resolution (SR) techniques are desirable.Depth map SR can be generally addressed by two different types of approaches that depend on the use of input data.For single depth map SR, the resolution of the input depth map can be enhanced based on the information learned with from a pre-collected training database.Meanwhile, depth map SR algorithms that use RGB-D data can be further classified into MRF and filtering-based approaches.MRF-based methods view depth map SR as an optimization problem.Filtering-based methods obtain the weighted average of local depth map pixels for SR purposes.These methods aim to obtain a smooth HR depth map for regions belonging to the same object.However, these methods have two main issues:1) the inaccurate edges of the depth map cannot be fully refined and 2) the edges of the HR depth map suffer from blurring.In this paper, a novel texture edge-guided depth reconstruction approach is proposed to address the issue of existing methods.We pay more attention to the depth edge refinement, which is usually ignored by existing methods.Method In the first stage, an initial HR depth map is obtained by general up-sampling methods, such as interpolation and filters.Then, initial depth edges are extracted from the initial HR depth map by using many edge detectors for edge detection, such as Sobel and Canny.The edges extracted directly from the initial HR depth map are not the true edges because the misalignment between the LR depth map edges and the texture edges and the up-sampling operation can cause further edge errors.Subsequently, the texture edges are extracted from the color image.Traditional approaches for edge detection do not consider the visually salient edges; the texture edges and illusory contours are all taken as image edges.Moreover, many edges of the color image do not correspond to depth edges, such as the edges inside the object.Inspired by the advanced positive result of the vision field, we propose a depth map edge detection method based on the structured forest.The edge map of the color image is initially extracted by using the recently structured learning approach.By incorporating the 3D space information provided by the initial HR depth map, the texture edges of the objects inside are removed.Then, we obtain a clear and true depth edge map.Finally, the depth values on each side of the depth edge are refined to align the depth edges and correct the depth errors in the initial HR depth map.We detect the incorrect depth regions between the initial depth edges and the corresponding true depth edges and then fill the incorrect regions until the depth edges are consistent with the corresponding color image.The incorrect regions of initial HR depth map are refined by the joint bilateral filter in an outside-inward refining order that is regularized by the detected true depth edges.Result We perform experiments on the NYU dataset, which offers real-world color-depth image pairs that were captured by a Kinect camera.To evaluate the performance of our proposed method, we compare our results with two method categories:1) state-of-the-art single depth image super resolution methods (ScSR, PB, and E.G.) and 2) state-of-the-art color-guided depth map super resolution approaches (JBU, GIU, MRF, WMF, and JTU).We implement most of these methods by using the same parameter settings as provided in the corresponding papers.We down-sample the original depth maps into LR ones and perform SR.We evaluate our proposed method with the recovered HR depth map and the reconstructed point clouds.The recovered HR depth maps indicate that our proposed methods generate more visually appealing results than the compared approaches.The boundaries in our results are generally sharper and smoother along the edge direction, whereas the compared methods suffer from blurred artifacts around the boundaries.To demonstrate further the effectiveness of our proposed approach, we provide the 3D point clouds constructed from the up-scaled depth map with different methods.Results indicate that our proposed method yields a relatively clear foreground and background, while the competing results suffer from obvious flying pixels and aliased planes.Conclusion We present a novel method for depth map SR for Kinect depth.Experimental results demonstrate that the proposed method provides sharp and clear edges for the Kinect depth, and the depth edges are aligned with the texture edges.The proposed framework synthesizes an HR depth map given its LR depth map and corresponding HR color image.Our proposed method first estimates the initial HR depth map via traditional up-sampling approaches, then extracts the true edges of the RGB-D data and the fake edges of the initial HR depth map to identify the incorrect regions between the two edges.The incorrect regions of the initial HR depth maps are further refined by joint bilateral filter in an outside-inward refining order to align the edges of color image and depth map.The key to our success is the use of RGB-D depth edge detection, which is inspired by the structured forests-based edge detection.Besides, unlike most depth enhancement methods that use raster-scan order to fill incorrect regions, our method can determine the filing order by considering the true edges.Thus, our HR depth map output exhibits better quality with clear and aligned depth edges compared with the existing depth map SR.However, texture-based guidance may result in incorrect depth value due to the smooth object surface with rich color texture.Thus, the suppression of texture copying artifacts may be our next research goal.关键词:depth map;Kinect;super-resolution reconstruction;edge detection;texture-guided46|6|4更新时间:2024-05-07

摘要:Objective Depth map plays an increasingly important role in many computer vision applications, such as 3D reconstruction, augmented reality, and gesture recognition.A new generation of active 3D range sensors, such as Microsoft Kinect camera, enables the acquisition of a real-time and affordable depth map.Incidentally, unlike natural images captured by RGB sensors, the depth maps captured by range sensors typically have low resolution (LR) and inaccurate edges due to their intrinsic physical constraints.Given that an accurate and high-resolution (HR) depth map is required and preferable in many applications, excellent depth map super-resolution (SR) techniques are desirable.Depth map SR can be generally addressed by two different types of approaches that depend on the use of input data.For single depth map SR, the resolution of the input depth map can be enhanced based on the information learned with from a pre-collected training database.Meanwhile, depth map SR algorithms that use RGB-D data can be further classified into MRF and filtering-based approaches.MRF-based methods view depth map SR as an optimization problem.Filtering-based methods obtain the weighted average of local depth map pixels for SR purposes.These methods aim to obtain a smooth HR depth map for regions belonging to the same object.However, these methods have two main issues:1) the inaccurate edges of the depth map cannot be fully refined and 2) the edges of the HR depth map suffer from blurring.In this paper, a novel texture edge-guided depth reconstruction approach is proposed to address the issue of existing methods.We pay more attention to the depth edge refinement, which is usually ignored by existing methods.Method In the first stage, an initial HR depth map is obtained by general up-sampling methods, such as interpolation and filters.Then, initial depth edges are extracted from the initial HR depth map by using many edge detectors for edge detection, such as Sobel and Canny.The edges extracted directly from the initial HR depth map are not the true edges because the misalignment between the LR depth map edges and the texture edges and the up-sampling operation can cause further edge errors.Subsequently, the texture edges are extracted from the color image.Traditional approaches for edge detection do not consider the visually salient edges; the texture edges and illusory contours are all taken as image edges.Moreover, many edges of the color image do not correspond to depth edges, such as the edges inside the object.Inspired by the advanced positive result of the vision field, we propose a depth map edge detection method based on the structured forest.The edge map of the color image is initially extracted by using the recently structured learning approach.By incorporating the 3D space information provided by the initial HR depth map, the texture edges of the objects inside are removed.Then, we obtain a clear and true depth edge map.Finally, the depth values on each side of the depth edge are refined to align the depth edges and correct the depth errors in the initial HR depth map.We detect the incorrect depth regions between the initial depth edges and the corresponding true depth edges and then fill the incorrect regions until the depth edges are consistent with the corresponding color image.The incorrect regions of initial HR depth map are refined by the joint bilateral filter in an outside-inward refining order that is regularized by the detected true depth edges.Result We perform experiments on the NYU dataset, which offers real-world color-depth image pairs that were captured by a Kinect camera.To evaluate the performance of our proposed method, we compare our results with two method categories:1) state-of-the-art single depth image super resolution methods (ScSR, PB, and E.G.) and 2) state-of-the-art color-guided depth map super resolution approaches (JBU, GIU, MRF, WMF, and JTU).We implement most of these methods by using the same parameter settings as provided in the corresponding papers.We down-sample the original depth maps into LR ones and perform SR.We evaluate our proposed method with the recovered HR depth map and the reconstructed point clouds.The recovered HR depth maps indicate that our proposed methods generate more visually appealing results than the compared approaches.The boundaries in our results are generally sharper and smoother along the edge direction, whereas the compared methods suffer from blurred artifacts around the boundaries.To demonstrate further the effectiveness of our proposed approach, we provide the 3D point clouds constructed from the up-scaled depth map with different methods.Results indicate that our proposed method yields a relatively clear foreground and background, while the competing results suffer from obvious flying pixels and aliased planes.Conclusion We present a novel method for depth map SR for Kinect depth.Experimental results demonstrate that the proposed method provides sharp and clear edges for the Kinect depth, and the depth edges are aligned with the texture edges.The proposed framework synthesizes an HR depth map given its LR depth map and corresponding HR color image.Our proposed method first estimates the initial HR depth map via traditional up-sampling approaches, then extracts the true edges of the RGB-D data and the fake edges of the initial HR depth map to identify the incorrect regions between the two edges.The incorrect regions of the initial HR depth maps are further refined by joint bilateral filter in an outside-inward refining order to align the edges of color image and depth map.The key to our success is the use of RGB-D depth edge detection, which is inspired by the structured forests-based edge detection.Besides, unlike most depth enhancement methods that use raster-scan order to fill incorrect regions, our method can determine the filing order by considering the true edges.Thus, our HR depth map output exhibits better quality with clear and aligned depth edges compared with the existing depth map SR.However, texture-based guidance may result in incorrect depth value due to the smooth object surface with rich color texture.Thus, the suppression of texture copying artifacts may be our next research goal.关键词:depth map;Kinect;super-resolution reconstruction;edge detection;texture-guided46|6|4更新时间:2024-05-07 -