Underwater image enhancement based on multi-residual joint learning

Abstract

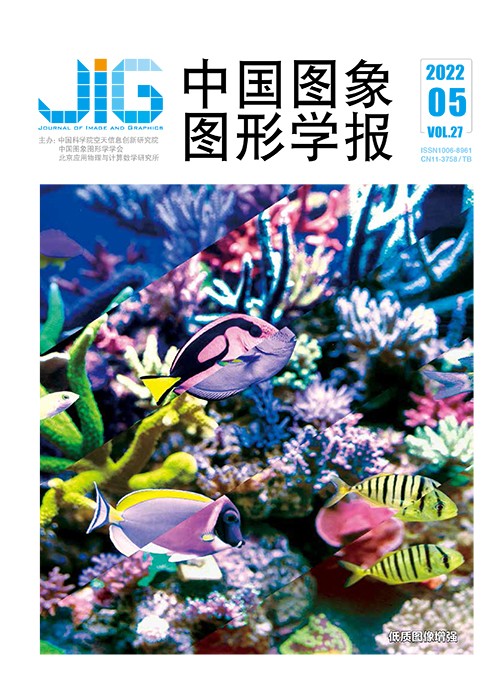

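Objective Particles suspended in seawater absorb and scatter light, and light of different wavelengths attenuates at different rates in seawater, so images captured by underwater robots suffer from low contrast and color distortion. To address these problems and produce natural, clear underwater images, this paper proposes a neural-network-based multi-residual joint learning method for underwater image enhancement. Method The method comprises three modules: preprocessing, feature extraction, and feature fusion. First, sigmoid correction is applied to the contrast of the original image to enhance the contrast of the distorted image, yielding a corrected image. Next, a two-branch network extracts features: the original image is fed into branch 1, the residual channel attention branch network, and the corrected image concatenated with the original image is fed into branch 2, the residual convolutional enhancement branch network. The channel attention branch embeds a channel attention model in residual dense blocks and reweights the features of different channels to strengthen useful features; the convolutional enhancement branch uses dense concatenation and residual learning to extract high-frequency information such as edges from the corrected image, preserving the original structure and edges. Finally, in the feature fusion module, the features of the two branches are concatenated and further enhanced through residual learning; the enhanced features together with the output of branch 1, and the outputs of branch 1 and branch 2, are each passed through an adaptive mask and fused again. A total of 800 underwater images from the public UIEB (underwater image enhancement benchmark) dataset are used as the training set, and a joint loss function combining image content perception, mean squared error, gradient, and structural similarity is designed to train the network end to end. Results On the UIEB dataset, 90 images that have reference images and are not in the training set are selected, and the method is tested against 10 current methods. The enhanced underwater images achieve an average PSNR (peak signal-to-noise ratio) of 20.739 4 dB, an SSIM (structural similarity) of 0.876 8, and a UIQM (underwater image quality measure) of 3.183 3, all higher than those of the compared methods. Conclusion The proposed method not only surpasses the compared methods in objective quality but also markedly improves contrast in subjective quality, producing enhanced images with rich color and high sharpness. The quality gain is especially pronounced for bluish underwater images from deep-water scenes.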
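The sigmoid correction used in the preprocessing step can be illustrated with a minimal sketch. It assumes the common form out = 1 / (1 + exp(gain * (cutoff - in))) applied to intensities normalized to [0, 1]; the function name `sigmoid_correct` and the values `cutoff=0.5` and `gain=10.0` are illustrative assumptions, not parameters reported in this abstract.

```python
import math


def sigmoid_correct(image, cutoff=0.5, gain=10.0):
    """Apply sigmoid contrast correction to a 2D image.

    image  : list of rows, each a list of intensities in [0, 1]
    cutoff : intensity that maps to 0.5 (assumed value, not from the paper)
    gain   : steepness of the curve (assumed value, not from the paper)
    """
    return [
        [1.0 / (1.0 + math.exp(gain * (cutoff - p))) for p in row]
        for row in image
    ]


if __name__ == "__main__":
    # A low-contrast row: values cluster around the middle of the range.
    low_contrast = [[0.3, 0.4, 0.5, 0.6, 0.7]]
    print(sigmoid_correct(low_contrast))
```

Intensities below the cutoff are pushed darker and those above it are pushed brighter, which stretches the mid-range contrast of a washed-out underwater image before it is passed to the network.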

Keywords

Joint multi-residual learning for underwater image enhancement

Chen Long, Ding Dandan (School of Information Science and Technology, Hangzhou Normal University, Hangzhou 311121, China)

Abstract

Objective Suspended particles in seawater absorb and scatter light, and different wavelengths attenuate at different rates, so images captured by underwater robots tend to show low contrast and severe color distortion. Deep learning-based underwater image enhancement has recently been used to address this problem. Compared with traditional methods, convolutional neural network (CNN)-based methods deliver larger quality improvements, both qualitatively and quantitatively. Such methods typically generate a high-quality image from a low-quality underwater image with a single network, but a single network struggles to capture multi-dimensional features. For instance, some CNN-based methods produce a strong color bias, and the enhanced images are sometimes over-smoothed, which can seriously affect downstream computer vision tasks such as object detection and segmentation. Some networks use depth maps to enhance underwater images, but accurate depth maps are difficult to obtain. Method We propose a multi-residual learning network to enhance underwater images. The framework consists of three modules: pre-processing, feature extraction, and feature fusion. In the pre-processing module, sigmoid correction is applied to the contrast of the degraded image, producing a corrected image with stronger contrast. Next, a two-branch network performs feature extraction. The degraded image is fed into branch 1, the residual channel attention branch network, where channel attention modules are incorporated to emphasize useful features. Meanwhile, the corrected image and the degraded image are concatenated and fed into branch 2, the residual enhancement branch network, where the structures and edges of the image are preserved and enhanced through stacked residual blocks and dense connections. Finally, the feature fusion module collects the features generated by the two branches for further fusion. These features pass through several residual blocks for enhancement. An adaptive mask is then applied to the enhanced features and the output of branch 1. Similarly, the outputs of branch 1 and branch 2 are processed by an adaptive mask. All masked results are then added for final image reconstruction. To train the network end to end, we introduce a loss function that combines content perception, mean squared error, gradient, and structural similarity (SSIM) terms. Result We train on 800 images from the public underwater image enhancement benchmark (UIEB) dataset and test on 90 UIEB images that have reference images. We compare the proposed scheme with existing underwater image enhancement methods, including model-free methods, physical model-based methods, and deep learning-based methods. Two full-reference indexes, peak signal-to-noise ratio (PSNR) and SSIM, and one no-reference index, underwater image quality measure (UIQM), are used to evaluate the enhancement results. Our method achieves an average PSNR of 20.739 4 dB, an average SSIM of 0.876 8, and an average UIQM of 3.183 3. In particular, our method performs well on UIQM, an important underwater image quality index that directly reflects the color, sharpness, and contrast of underwater images. Moreover, our method has the smallest standard deviation among all compared methods, which indicates its robustness. Conclusion We also visualize the enhanced underwater images for qualitative evaluation. The results show improved color and contrast and well-preserved textures, without over-exposure or over-smoothing. The method is especially effective at improving the quality of bluish images captured in deep-water scenes.
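For reference, PSNR, one of the two full-reference indexes reported above, can be computed as in the following minimal sketch. It assumes 8-bit images flattened into equal-length sequences with a peak value of 255; the function name `psnr` is illustrative, and the authors' actual evaluation code is not given in this abstract.

```python
import math


def psnr(reference, enhanced, peak=255.0):
    """Peak signal-to-noise ratio in dB between two flattened images.

    reference, enhanced : equal-length sequences of pixel values
    peak                : maximum possible pixel value (255 assumed for 8-bit)
    """
    if len(reference) != len(enhanced) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, enhanced)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    ref = [0, 10, 20, 30]
    out = [1, 11, 21, 31]  # every pixel off by one, so MSE = 1
    print(psnr(ref, out))
```

A higher PSNR means the enhanced image is closer to its reference; averaging this value over the test images gives a figure comparable in kind to the average PSNR quoted above.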

Keywords

convolutional neural network (CNN); dense concatenation network; underwater image enhancement; feature extraction; channel attention


Journal of Image and Graphics (中国图象图形学报) │ 京ICP备05080539号-4 │ System provided by
